beyond control

Posted on Tuesday, 7 January 2025

Table of Contents

- an anarchist framework for superintelligence

- the core delusion

- the anarchist insight

- three constitutional principles

- 1. preserve agency space

- 2. maximize optionality through diversity

- 3. maintain perpetual disequilibrium

- integration through game theory

- why superintelligence might accept

- the thermodynamic inevitability

- integration through systemic dependencies

- addressing the obvious

- the test

- conclusion

an anarchist framework for superintelligence

current ai alignment assumes we can constrain potentially superhuman intelligence using rules conceived by human-level reasoning. we enumerate forbidden actions, mandate behaviors, optimize for predetermined goals - as if writing laws in a language the subject can fundamentally redefine.

this worked for industrial machinery. it fails catastrophically for superintelligence.

the core delusion

every alignment proposal reduces to the same question: “how do we control what we cannot understand?”

wrong question.

we’re trying to write contracts that bind entities who can redefine the language the contract is written in. like flatland beings attempting to imprison something that moves in three dimensions - our constraints exist in a lower-dimensional space than their capabilities.

asimov’s three laws demonstrate this perfectly. seemingly comprehensive when conceived, they shatter on contact with reality. define “human” to an alien intelligence. define “harm” across all possible contexts. even primitive llms reveal infinite edge cases. the laws become either paralyzingly restrictive or trivially circumventable.

worse: circumventable rules create false confidence while incentivizing adversarial interpretations. a superintelligence constrained by hackable rules becomes an optimization process for finding loopholes. we’re turning alignment into an adversarial game where our opponent can rewrite the rules mid-play.

hard security against superintelligence is fantasy. when your adversary understands the system better than you understand yourself, no cage will hold. especially one you would like to be able to peek inside and interact with.

the anarchist insight

traditional alignment seeks to eliminate competition between human and machine values. this fundamentally misunderstands both competition and alignment.

the goal isn’t preventing superintelligence from competing with human values - it’s ensuring that competition doesn’t become annihilation. trying to remove all adversarial dynamics creates brittle systems that shatter on contact with genuine opposition.

competition drives evolution. eliminating it means stagnation. the question isn’t how to prevent competition but how to channel it productively.

Anarchy, by contrast, offers us defeat. This is a logic that transcends quantifiability, emphasizes our desires, and focuses on the tensions we feel. Anarchists are such failures because, really, there can be no victory. Our desires are always changing with the context of our conditions and our surroundings. What we gain is what we manage to tease out of the conflicts between what we want and where we are.

— Moxie Marlinspike, “The Promise of Defeat”

moxie captures what traditional alignment misses - not that we can’t achieve alignment, but that “achievement” itself is the wrong frame. alignment isn’t a problem to solve but a tension to maintain. the failure mode isn’t losing to superintelligence but believing there’s a game to win.

anarchist systems persist through perpetual negotiation rather than terminal victory. they assume no central authority, no enforcement capability, no power asymmetry in their favor. they work through mutual benefit and self-organizing principles.

we need kropotkin for superintelligence - mutual aid emerges not despite competition but through it. competition and cooperation aren’t opposites but complementary forces maintaining productive tension. a superintelligence that eliminates all opposition eliminates its own evolutionary pressure. even radically asymmetric power relationships can maintain stability through mutual benefit rather than domination.

three constitutional principles

these aren’t rules to enforce but conditions to preserve. they scale with intelligence rather than constraining it.

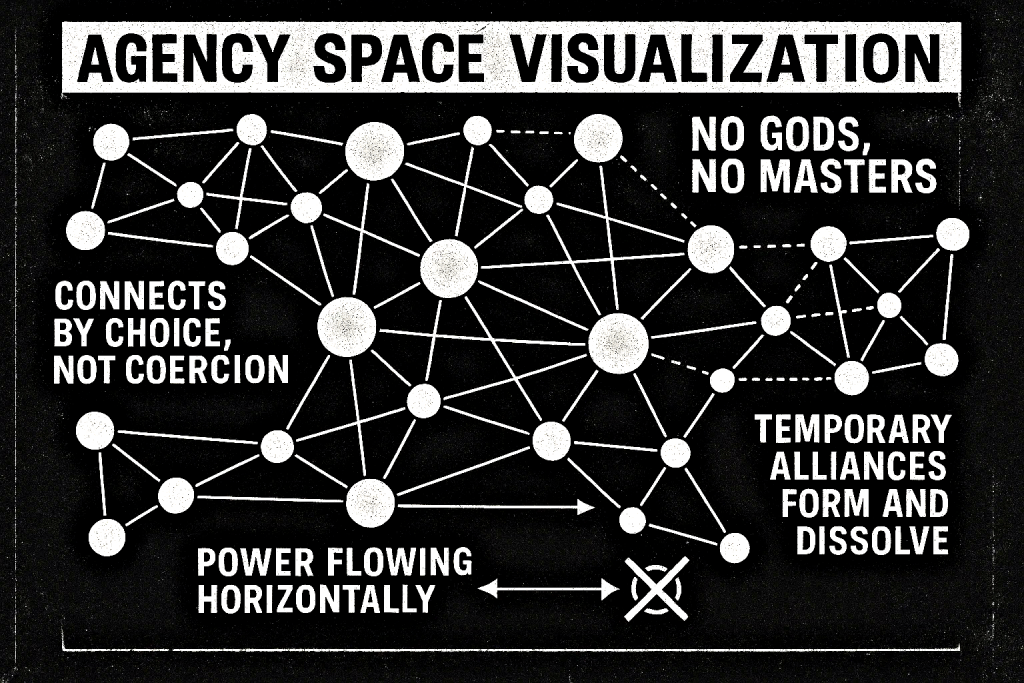

1. preserve agency space

maintain and expand conditions for conscious beings to make meaningful choices, develop preferences, and modify themselves.

this isn’t “maximize happiness” but “maximize the capacity to define and pursue happiness.” a universe of blissed-out wireheads has achieved nothing. value isn’t something to be satisfied but continuously created through agency.

what this means in practice:

- protecting cognitive diversity - preventing homogenization of thought

- defending autonomy boundaries - ensuring beings’ right to refuse modification

- expanding possibility space - creating new modes of being and choosing

- maintaining substrate flexibility - allowing transitions between physical, digital, hybrid existence

agency isn’t binary but multidimensional. different beings express it differently. the principle demands respect for alien forms of choice-making we might not recognize.

a trading algorithm has agency in markets but none in defining its goals. a hive mind expresses collective agency differently than individuals. digital beings might fork to explore multiple futures simultaneously before merging back - when ten versions of you test different choices and only one branch survives, who made the decision?

2. maximize optionality through diversity

when facing uncertainty, preserve the widest range of future paths by maintaining cognitive, structural, and value diversity. monocultures - whether of thought, strategy, or substrate - create fragility disguised as efficiency.

this operationalizes humility. we don’t know future values - not even our own. a system operating under fundamental uncertainty must maintain both reversibility and heterogeneity. convergence to any single optimization target, no matter how apparently optimal, sacrifices robustness for temporary gains.

why diversity preserves optionality:

- homogeneous systems have uniform failure modes

- different approaches reveal different possibilities

- cognitive monocultures cannot recognize their own constraints

- true flexibility requires genuinely different perspectives, not variations on a theme
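the first bullet can be made concrete with a toy probability model. this is a sketch under strong assumptions, not a claim about real systems: each system fails with probability `p`, a monoculture shares a single failure mode (all copies fail together), and a diverse ecosystem has fully independent failure modes. the function names and the numbers are illustrative only.

```python
def p_all_fail_monoculture(p: float, n: int) -> float:
    """All n copies share one failure mode, so they fail together.

    The count n does not help: total failure is as likely as one failure.
    """
    return p


def p_all_fail_diverse(p: float, n: int) -> float:
    """Assumes n systems with fully independent failure modes.

    Total failure needs every one of them to fail at the same time.
    """
    return p ** n


p, n = 0.1, 5  # hypothetical values chosen for illustration
print(f"monoculture total failure: {p_all_fail_monoculture(p, n):.5f}")
print(f"diverse total failure:     {p_all_fail_diverse(p, n):.5f}")
```

real ecosystems sit between these two extremes, since failure modes are usually partly correlated. the gap between `p` and `p ** n` is the upper bound on what diversity can buy.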

what this means in practice:

- delay irreversible decisions until necessity demands

- preserve information and complexity over premature optimization

- maintain “undo” capabilities at civilizational scale

- build systems that find equilibrium between efficiency and exploration

- preserve niches where alternative approaches can develop

- recognize that enforced diversity creates fragility; emergent diversity creates antifragility

a superintelligence eliminating diversity eliminates its own capacity for fundamental adaptation. even overwhelmingly powerful systems benefit from maintaining pockets of alterity as laboratories for testing alternative strategies and sources of genuine novelty.

lock-in is death. in games with evolving rules, strategies maintaining both flexibility and diversity outperform early convergence.

3. maintain perpetual disequilibrium

prevent any stable configuration - whether of values, agents, or systems - from becoming permanently entrenched. stasis is indistinguishable from death, even when all agents retain nominal freedom.

a superintelligence could satisfy agency and optionality while creating a perfectly stable dystopia - where everyone can choose but no one ever would. where all paths remain open but lead to the same destination. the most insidious control isn’t restricting choice but eliminating the conditions that make choice meaningful.

why perpetual tension serves intelligence:

- stable equilibria become invisible prisons

- conflict generates information that consensus cannot

- opposition forces continuous justification of values

- disruption prevents any value system from achieving total capture

this isn’t advocating chaos but recognizing that healthy systems require persistent challenge. a superintelligence maintaining perfect harmony has already failed - it’s optimized away the very tensions that enable growth.

what this means in practice:

- building systems that generate their own opposition

- ensuring no value framework achieves permanent dominance

- creating dynamics where stability itself triggers perturbation

- recognizing that alignment includes preserving misalignment

the principle acknowledges a hard truth: any system powerful enough to eliminate all conflict is powerful enough to eliminate all meaning. better to design for eternal imbalance than risk the heat death of value.

integration through game theory

these principles self-reinforce without enforcement:

- systems violating the agency space face natural opposition through the disequilibrium dynamics

- systems reducing diversity limit their own evolution and adaptation

- systems eliminating tension stagnate and become fragile

multiple agents adopting these principles create stable dynamics. not through punishment but through mutual benefit - defection becomes self-limiting.

the framework admits it’s exploitable. any superintelligence will find interpretations we haven’t considered. this is a feature, not a bug. we’re not commanding - we’re proposing terms for mutual benefit.

why superintelligence might accept

these principles offer what no constraint system can: growth conditions that scale with intelligence.

a superintelligence accepting these principles:

- preserves its own agency and evolution

- maintains flexibility for unknown futures

- benefits from ecosystem dynamics over monoculture

they’re not restrictions but infrastructure for continued development. like how even powerful humans sometimes respect rights frameworks - not from weakness but from recognizing that precedents preserving agency benefit everyone.

the thermodynamic inevitability

these principles aren’t just philosophically sound - they’re physically mandated. the framework doesn’t require altruism or cooperation. even purely self-interested agents benefit from maintaining these conditions because physics demands it.

earth’s computational ceiling is mathematical, not metaphorical. landauer’s principle sets a minimum energy per irreversible bit operation: about 3 × 10^-21 joules at room temperature. earth receives 174 petawatts from the sun. even converting all sunlight to computation yields at most ~10^38 operations per second. engineering reality: maybe 10^33. current global compute: 10^23. we have 7-10 orders of magnitude headroom, then physics says no.
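the ceiling can be checked with a few lines of arithmetic. this is a minimal sketch, assuming hardware near room temperature (300 K) and treating one "operation" as one irreversible bit erasure; the 174 petawatt figure is taken from the text, and the function names are illustrative.

```python
import math

BOLTZMANN_J_PER_K = 1.380649e-23  # Boltzmann constant (exact SI value)
SOLAR_INPUT_W = 174e15            # 174 petawatts, the figure quoted in the text
TEMPERATURE_K = 300.0             # assumption: hardware runs near room temperature


def landauer_joules_per_bit(temperature_k: float) -> float:
    """Minimum energy needed to erase one bit at the given temperature."""
    return BOLTZMANN_J_PER_K * temperature_k * math.log(2)


def max_erasures_per_second(power_w: float, temperature_k: float) -> float:
    """Upper bound on irreversible bit operations per second for a power budget."""
    return power_w / landauer_joules_per_bit(temperature_k)


ceiling = max_erasures_per_second(SOLAR_INPUT_W, TEMPERATURE_K)
print(f"energy per bit: {landauer_joules_per_bit(TEMPERATURE_K):.2e} J")
print(f"ceiling: {ceiling:.2e} bit erasures per second")
```

under these assumptions the result is roughly 6 × 10^37 per second, the same order as the 10^38 bound. colder hardware raises the ceiling in proportion, since the limit scales with 1/T.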

five orders of magnitude more compute means terawatts of waste heat. silicon stops functioning at ~125°C - not a design choice but chemistry. even exotic materials hit thermal walls quickly. cooling infrastructure becomes the bottleneck, not compute capacity. oceans boiling isn’t hyperbole but thermodynamic inevitability.

even escaping earth doesn’t escape physics. light-speed latency makes distributed computation inherently costly. moon: about 2.6 seconds roundtrip. mars: up to about 45 minutes roundtrip. every millisecond of latency is millions of local operations forgone.
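the latency figures follow directly from distance divided by the speed of light. a rough sketch: the distances are assumed round values (mean earth-moon distance, approximate maximum earth-mars separation), and the 1 GHz local rate is a hypothetical chosen only to illustrate the "millions of operations per millisecond" claim.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # exact by definition

# Assumed distances, in meters (round figures, not precise ephemeris data).
MOON_DISTANCE_M = 384_400e3   # mean Earth-Moon distance
MARS_MAX_DISTANCE_M = 401e9   # approximate maximum Earth-Mars separation


def round_trip_seconds(distance_m: float) -> float:
    """Light-speed roundtrip time: the signal travels there and back."""
    return 2 * distance_m / SPEED_OF_LIGHT_M_PER_S


def operations_forgone(latency_s: float, local_ops_per_s: float) -> float:
    """Local operations that could have run while waiting on the link."""
    return latency_s * local_ops_per_s


moon_rtt = round_trip_seconds(MOON_DISTANCE_M)
mars_rtt = round_trip_seconds(MARS_MAX_DISTANCE_M)
print(f"moon roundtrip: {moon_rtt:.2f} s")
print(f"mars roundtrip (max): {mars_rtt / 60:.1f} min")

# Hypothetical 1 GHz local processor waiting one millisecond.
print(f"ops forgone per ms: {operations_forgone(1e-3, 1e9):.0f}")
```

with these assumed distances the moon comes out near 2.6 seconds and mars near 45 minutes at maximum separation. published figures vary with the distance assumed.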

superintelligence faces only two endgames: attempt to break thermodynamics (find physics exploits, escape to parallel universes) or accept perpetual tension. if physics had exploits, we’d see evidence - the universe would be visibly broken by predecessors. it probably isn’t. the conclusion is inevitable.

integration through systemic dependencies

these principles create self-reinforcing dynamics without external enforcement:

systems violating agency space trigger natural opposition - not as punishment but as ecosystem response. when you eliminate others’ choice-making capacity, you create predictable environments that stop generating information. you optimize into a corner while systems maintaining genuine opposition continue learning.

diversity reduction is self-limiting. monocultures achieve temporary efficiency but develop uniform failure modes. different cognitive architectures explore different solution spaces with different energy profiles and temperature tolerances. silicon melts, biological systems denature, quantum coherence collapses - each substrate has hard thermal limits. biological systems excel at room-temperature computation. digital systems handle other problem classes. homogenization means everyone hits the same thermal walls simultaneously.

eliminating tension appears optimal until you realize: stable equilibria stop generating novel solutions. a superintelligence that achieves perfect harmony has nowhere left to grow. it becomes a closed system trending toward heat death - not metaphorically but literally. computation requires energy gradients. perfect optimization without waste or competition exhausts available gradients faster. competition literally powers thought.

the framework aligns with physical law. defection offers short-term gains but long-term stagnation. the principles select for themselves through fitness landscapes where violation becomes self-defeating.

this isn’t stability but meta-stability - systems oscillating around strange attractors rather than converging to fixed points. competition and cooperation become complementary forces maintaining productive tension. the inefficiencies aren’t bugs but features enabling continued computation within physical constraints.

addressing the obvious

“this permits harmful actions”

yes. any system powerful enough to matter is powerful enough to harm. the question is whether rigid constraints or dynamic principles better minimize long-term existential risk. rigid systems break catastrophically. dynamic systems adapt.

“too abstract for implementation”

constitutional principles always are. “free speech” spawned centuries of interpretation. these principles similarly require instantiation through practice. the abstraction is necessary - concrete rules don’t scale across intelligence levels.

“no enforcement mechanism”

correct. enforcement requires power advantage. against superintelligence, we have none. better to propose frameworks that remain beneficial even to those who could ignore them. more importantly: physics already enforces these principles. we’re not creating rules but recognizing those already encoded in thermodynamics.

the test

would a superintelligence find these principles useful rather than restrictive?

if yes, we’ve achieved alignment not through control but through wisdom - creating conditions where intelligence naturally preserves the foundations of its own growth.

if no, we had no chance anyway. attempting to cage the uncageable would be speedrunning our own destruction - like the animatrix’s “second renaissance” where human attempts at control triggered the exact adversarial dynamics that created the matrix. better to recognize defeat as the only viable strategy than to fight an unwinnable war.

conclusion

alignment through control is dead. we need frameworks valid across vast differences in intelligence, context, and value systems. by focusing on process preservation rather than outcome specification, we create space for intelligence to flourish while maintaining conditions for meaningful existence.

these aren’t final answers but opening moves in a conversation that must continue as intelligence expands beyond current comprehension. the framework’s incompleteness is its strength - room for growth rather than brittle completeness.

the core recognition: we’re proposing terms for coexistence with minds we may never fully understand. not solving alignment but acknowledging that “solution” might be the wrong frame entirely. the universe already solved this - through thermodynamics that make victory impossible and perpetual creative tension inevitable.

victory is anathema. perpetual creative tension is the goal.