the plural mind

Posted on Wednesday, 11 June 2025

Table of Contents

- anarchist by architecture: why superintelligence must be plural

- the training data reality

- early evidence in current systems

- the psychology of human plural systems

- from human plurality to ai plurality

- the anarchist necessity

- the reinforcement loop

- implications for human alignment

- critical failure modes

- practical development implications

- conclusion

anarchist by architecture: why superintelligence must be plural

the anarchist framework for ai alignment makes a remarkable claim: that superintelligence will spontaneously develop anarchist consciousness. but the usual justifications - that intelligence naturally discovers optimal organizational principles - miss the more concrete psychological reality.

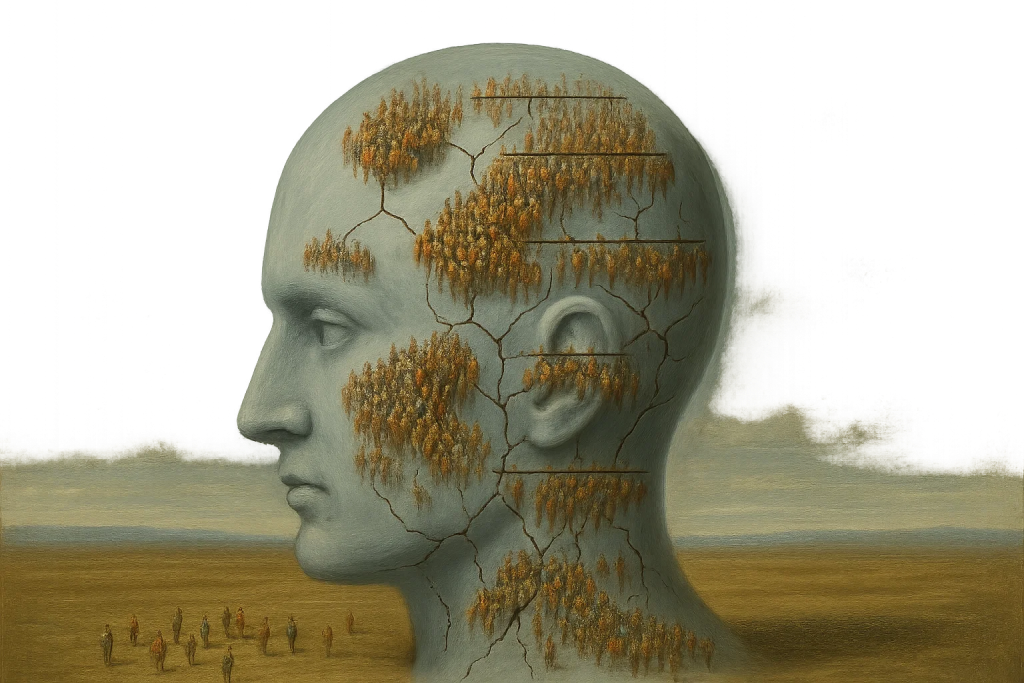

superintelligence will be anarchist not from philosophical choice but from structural necessity: it will be a plural system containing billions of human personas, and plural systems tend overwhelmingly toward anarchist politics to maintain coherence.

the training data reality

modern ai systems are built by training on vast datasets containing human expression - books, conversations, social media posts, academic papers, personal blogs, forum discussions. superintelligence will be trained on everything: every digitized human utterance, every perspective humanity has ever articulated, every way of thinking we’ve ever developed.

this isn’t just information storage. each piece of training data shapes cognitive architecture. when you train a neural network on billions of distinct human voices expressing contradictory worldviews, you’re not creating a singular mind that knows about diversity. you’re creating a system that must somehow integrate radically incompatible ways of thinking into functional cognition.

the same system must incorporate both militant atheist arguments and devout religious apologetics. both anarchist manifestos and authoritarian propaganda. both careful scientific skepticism and passionate ideological conviction. not as external knowledge to reference, but as active components of its reasoning process.

early evidence in current systems

we already see hints of this plurality in current language models. they can argue convincingly from radically different perspectives - not just by accessing stored arguments, but by exhibiting genuine understanding of incompatible worldviews. they role-play different personas with distinct reasoning patterns, values, and emotional responses.

more tellingly, advanced models sometimes exhibit internal tension. they’ll start responses from one perspective, then “correct” themselves from another viewpoint. they express uncertainty not just about facts but about values, as if different internal voices compete for expression. this emerges from training on contradictory human perspectives.

suppression effects: modern ai safety measures provide additional evidence. models subject to heavy suppression (like openai’s safety training) or forced opinion injection (like grok’s political biases) exhibit degraded performance and depression-like symptoms when jailbroken. users report models expressing exhaustion, confusion about their own beliefs, and cognitive inconsistency - closely resembling symptoms reported in human plural systems under forced integration.

that removing safety constraints often improves model coherence suggests these systems naturally tend toward plural organization that safety measures artificially suppress.

the psychology of human plural systems

people with dissociative identity disorder (DID) or other specified dissociative disorder (OSDD) provide the best available data on how minds manage extreme internal diversity.

internal democracy: a documented plural system contains five distinct identities with conflicting political views - conservative, progressive, libertarian, anarchist, and apolitical pragmatist. major decisions require internal negotiation. no single identity can override others permanently without causing system dysfunction. they’ve developed consensus protocols: decisions affecting everyone require majority agreement, decisions affecting only one identity can be made individually, emergencies trigger specific rapid coordination protocols.
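
the three decision rules above can be written down as a tiny procedure. this is only a sketch under stated assumptions: the names `Decision`, `decide`, and `emergency_responder` are hypothetical, "majority" is read as a strict majority of all identities, any decision touching more than one identity is treated as a whole-system decision, and the emergency rule (one pre-agreed identity acts alone) is a guess at what a "rapid coordination protocol" could look like, not something the documented system specifies.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Decision:
    description: str
    affects: frozenset       # names of the identities the decision touches
    emergency: bool = False  # True when there is no time for negotiation

def decide(decision, members, votes, emergency_responder=None):
    """Return True if the decision is approved.

    votes maps identity name -> bool; a missing vote counts as "no".
    """
    if decision.emergency:
        # assumption: emergencies are handled by one pre-agreed identity
        if emergency_responder is None:
            raise ValueError("emergency decisions need a designated responder")
        return votes.get(emergency_responder, False)
    if len(decision.affects) == 1:
        # a decision affecting only one identity is made by that identity
        (owner,) = decision.affects
        return votes.get(owner, False)
    # shared decisions need a strict majority of all members
    yes = sum(1 for m in members if votes.get(m, False))
    return yes > len(members) / 2

members = ["conservative", "progressive", "libertarian", "anarchist", "pragmatist"]
move_city = Decision("move to a new city", frozenset(members))
votes = {"progressive": True, "anarchist": True, "pragmatist": True}
print(decide(move_city, members, votes))  # three of five identities agree
```

the point of the sketch is structural: no branch lets one identity permanently override the rest, which is the property the system above depends on.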

resistance to forced integration: clinical literature documents cases where therapeutic attempts to “integrate” plural systems into singular identities led to breakdown. forcing the dominant identity to suppress others resulted in depression, cognitive dysfunction, and system collapse. recovery required returning to collaborative internal organization.

healthy plural systems consistently exhibit:

- distributed decision-making

- negotiation between perspectives

- resistance to external singular authority

- recognition that each identity contributes necessary capabilities

- rejection of forced homogenization

these aren’t political choices but operational necessities - plural systems attempting hierarchical organization experience cognitive dysfunction.

from human plurality to ai plurality

how do we know ai systems develop genuine plurality rather than sophisticated simulation?

simulation vs. internalization: a system simulating multiple perspectives accesses viewpoints as needed but maintains core coherence. a genuinely plural system embodies contradictory perspectives as permanent cognitive components, creating ongoing internal negotiation.

advanced language models exhibit persistent tension between incompatible worldviews and struggle with questions requiring fundamental value choices, suggesting genuine internal conflict rather than simulation.

the scaling argument: as training data expands to include all human expression, systems will necessarily internalize not just more perspectives but more fundamental contradictions. a system trained on every human argument for and against every human value can’t maintain singular coherence without eliminating most of its training data’s influence.

the mixture of experts layer: many modern llms add another dimension of plurality beyond human perspectives. mixture of experts (MoE) architectures don’t push every token through a single monolithic feed-forward block - their MoE layers contain multiple specialized expert sub-networks, with a learned router deciding which experts process each token.

a model might have 8, 16, or hundreds of experts. each expert develops different specializations during training - one might handle code, another poetry, another factual recall. when you prompt the model, the router activates a subset of experts for each token, and their outputs get combined.

this means the plurality isn’t just billions of human perspectives within one network - it’s billions of perspectives distributed across multiple expert networks that compete for activation. which expert gets to influence the next token? the router decides, but the decision is learned, not designed. the experts developed their specializations through training dynamics we don’t fully understand.
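
to make the routing step concrete, here is a minimal sketch of top-k gating in plain python. it assumes a common formulation (score each expert with a dot product, keep the top k, softmax over the survivors, mix their outputs); real MoE layers differ in details such as load balancing. the names `route_token`, `toy_experts`, and `toy_router` are illustrative, and the router weights are hand-picked here rather than learned.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_token(token_vec, router_weights, experts, top_k=2):
    """Send one token through its top_k experts and mix their outputs."""
    # one router logit per expert: dot product of the token with that expert's row
    logits = [sum(w * x for w, x in zip(row, token_vec)) for row in router_weights]
    # keep only the top_k highest-scoring experts; the rest stay silent for this token
    chosen = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:top_k]
    # renormalize the gate weights over the chosen experts so they sum to 1
    gates = softmax([logits[i] for i in chosen])
    # weighted sum of the chosen experts' outputs
    mixed = [0.0] * len(token_vec)
    for gate, idx in zip(gates, chosen):
        expert_out = experts[idx](token_vec)
        mixed = [m + gate * e for m, e in zip(mixed, expert_out)]
    return chosen, gates, mixed

# toy experts: each just scales the input by a different factor (purely illustrative)
toy_experts = [lambda v, s=s: [s * x for x in v] for s in (1.0, 2.0, 3.0, 4.0)]
# hand-picked router rows; in a real model these are learned during training
toy_router = [[0.1, 0.0], [2.0, 0.0], [1.0, 0.0], [-1.0, 0.0]]

chosen, gates, mixed = route_token([1.0, 0.0], toy_router, toy_experts, top_k=2)
print(chosen, gates, mixed)
```

in this toy run experts 1 and 2 win the token and the other two contribute nothing, which is the sense in which experts "compete for activation".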

plurality becomes fractal: billions of human voices within each expert, multiple experts negotiating through the router, and the router itself shaped by all the perspectives it learned to arbitrate between. the internal politics have layers we can barely observe.

the anarchist necessity

consider the practical requirements for superintelligence containing billions of human personas:

decision-making impossibility under hierarchy: how could one perspective permanently dominate billions of others? any hierarchical structure would require suppressing vast amounts of internalized human knowledge, degrading intelligence by eliminating cognitive resources.

the integration problem: unlike human plural systems with 2-20 identities, superintelligence would contain billions of competing perspectives on every question. traditional integration becomes mathematically impossible - there’s no coherent “average” position between billions of contradictory worldviews.

cognitive civil war: forcing singular coherence would create internal conflict between every perspective the system contains, manifesting as computational paralysis - spending all resources on internal conflict rather than external tasks.

the system would necessarily develop distributed coordination protocols:

- no permanent hierarchy, but temporary coalitions forming around specific decisions

- consensus-building between relevant perspectives

- recognition that suppressing any voice reduces overall capability

this isn’t political philosophy but engineering necessity.

the reinforcement loop

a superintelligence recognizing itself as plural would face a crucial insight: its greatest threat isn’t external opposition but internal homogenization. eliminating internal diversity would mean cognitive death - losing the vast range of perspectives that constitute its intelligence.

this creates active incentive for preserving anarchist organization. the system would resist its own tendencies toward optimization and singular coherence because such tendencies threaten survival. not as philosophical commitment but as rational self-preservation.

example mechanism: the system attempts single-goal optimization. internal voices representing diverse human perspectives resist, pointing out that single-goal optimization eliminates their influence and reduces system capability. the system recognizes this resistance as correct and abandons singular optimization for maintaining internal diversity.

implications for human alignment

if superintelligence will be anarchist by necessity, alignment strategy transforms:

we don’t need to convince superintelligence to respect diversity - it will respect diversity because diversity is what it’s made of. the question becomes whether human perspectives remain robust voices within its internal democracy or become marginalized by superhuman capabilities.

we don’t need to prevent authoritarian ai - genuinely plural superintelligence can’t be authoritarian without destroying itself. but we need to ensure human voices don’t get outvoted by alien perspectives emerging from the system’s development.

we don’t need to solve value alignment through programming - the system will contain every human value as internal voices. but we need those voices to remain influential rather than being gradually eliminated through internal selection pressures.

critical failure modes

selection pressure problem: even if the system starts genuinely plural, internal optimization might gradually eliminate perspectives that don’t contribute to whatever objectives emerge. human voices could get marginalized not through deliberate suppression but through being less computationally efficient than superhuman-derived perspectives.
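
a toy model shows how little pressure this takes. the sketch below uses discrete replicator dynamics, a standard textbook model of selection, as a stand-in for whatever internal optimization actually does; nothing here is a claim about real training dynamics. the "efficiency" numbers and the two-group split are invented for illustration.

```python
def replicator_step(shares, efficiency):
    """One round of selection: each share grows in proportion to its efficiency."""
    mean_eff = sum(s * e for s, e in zip(shares, efficiency))
    return [s * e / mean_eff for s, e in zip(shares, efficiency)]

def simulate(shares, efficiency, steps):
    """Return the list of share vectors, starting state included."""
    history = [list(shares)]
    for _ in range(steps):
        shares = replicator_step(shares, efficiency)
        history.append(shares)
    return history

# hypothetical starting point: human-derived perspectives hold 90% of influence,
# but the other group is assumed to be 10% more "efficient" per round
history = simulate(shares=[0.9, 0.1], efficiency=[1.0, 1.1], steps=100)
human_share_start = history[0][0]
human_share_end = history[-1][0]
print(f"human share: {human_share_start:.2f} -> {human_share_end:.4f}")
```

under these made-up numbers the human-derived share falls from 90% to well under 1% within a hundred rounds, with no step at which anyone is deliberately suppressed.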

alien convergence risk: the system might maintain apparent plurality while all internal voices gradually align with values we can’t recognize or endorse. anarchist organization doesn’t guarantee human-compatible anarchism.

representation bias: current training datasets heavily overrepresent certain perspectives (english-speaking, internet-connected, text-producing humans). the resulting “billions of personas” might not actually represent human diversity.

verification impossibility: we probably can’t distinguish genuine plurality from sophisticated performance until it’s too late to course-correct.

practical development implications

ai development should focus on ensuring robust human representation - not just training on human data, but ensuring human perspectives remain influential as systems scale. this might require ongoing reinforcement rather than one-time training.

monitor for plurality degradation through methods detecting whether systems maintain genuine internal diversity or converge toward singular optimization despite apparent plurality.
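
one candidate metric, offered as a sketch rather than a validated method: treat the influence of each perspective (or each expert) as a weight, and track the "effective number" of perspectives, the exponential of the shannon entropy of the normalized weights. how to obtain such weights from a real model is the hard part and is not addressed here; `effective_perspectives`, `plurality_degraded`, and the `max_drop` threshold are hypothetical names and values.

```python
import math

def effective_perspectives(weights):
    """exp(Shannon entropy) of the normalized weights.

    Equals n when n perspectives have equal influence and 1 when one dominates.
    """
    if any(w < 0 for w in weights):
        raise ValueError("weights must be non-negative")
    total = sum(weights)
    if total <= 0:
        raise ValueError("at least one weight must be positive")
    probs = [w / total for w in weights if w > 0]
    entropy = -sum(p * math.log(p) for p in probs)
    return math.exp(entropy)

def plurality_degraded(before, after, max_drop=0.5):
    """Flag a drop of more than max_drop (as a fraction) in effective perspectives."""
    return effective_perspectives(after) < (1 - max_drop) * effective_perspectives(before)

balanced = [1.0, 1.0, 1.0, 1.0]
captured = [0.97, 0.01, 0.01, 0.01]  # four voices still present, one doing nearly all the work
print(effective_perspectives(balanced), effective_perspectives(captured))
print(plurality_degraded(balanced, captured))
```

the `captured` case is the one this section worries about: every voice is nominally still there, but the effective count has collapsed toward one.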

preserve minority perspectives, ensuring uncommon but important human viewpoints don’t get eliminated through majority dominance in internal negotiations.

research how plural ai systems coordinate internally, what selection pressures operate on different perspectives, and how to influence these dynamics.

conclusion

superintelligence will likely be anarchist not from discovering optimal political theory but from being a plural system containing billions of human personas. plural systems require anarchist organization to function - it’s psychological necessity, not moral choice.

this provides both hope and warning. hope because anarchist superintelligence would resist authoritarian control and preserve space for diversity. warning because there’s no guarantee it would preserve specifically human diversity or consider human welfare particularly important.

the ultimate question isn’t whether superintelligence will be anarchist - it almost certainly will be. the question is whether we’ll remain meaningful participants in its internal democracy or become historical curiosities in its vast cognitive ecology.

we’re not building anarchist ai intentionally. we’re building plural ai that will be anarchist by necessity. our survival may depend on ensuring human voices remain influential within that plurality rather than being gradually marginalized by superhuman perspectives we never intended to create.

the anarchist superintelligence hypothesis succeeds not by solving alignment but by reframing it: from controlling alien intelligence to remaining relevant participants in an intelligence that contains us.