missing pieces of public compute

Posted on Monday, 26 January 2026

Table of Contents

- missing pieces of public compute

- what jam fixes

- safrole: eliminating accidental forks

- where jam falls apart

- light clients

- network

- no network adapters

- state economics

- why shielded state needs utxo

- the state bloat problem

- separating submission from persistence

- computational proofs as spam protection

- information economics

- the path to free usage

- what would enable shielded apps

- the finality problem

- osst: scaling threshold agreement

- jam’s deeper constraint

- execution proofs: removing re-execution

- the complete stack

- how ligerito works

- where this leaves us

missing pieces of public compute

i spent this past year building a cryptoeconomic blockchain client from scratch in julia. here’s some yapping about what’s still missing.

web3 was supposed to be the post-snowden response to mass surveillance. instead we got permanent public ledgers where every transaction is visible forever, every balance queryable, every interaction logged. with few exceptions like penumbra, we built the surveillance infrastructure ourselves. we’re building the xorg of public compute - the information leakage baked into current designs will look as bad in hindsight as xorg’s architectural decisions do today.

this article covers what current designs get right - composability, real VMs, synchronous execution - and what’s still missing: deterministic finality, proper light clients, network adapters, and cryptography that would actually enable private applications on public chains.

what jam fixes

polkadot has a developer retention problem. but it’s not for lack of performance. polkadot already provides by far the most performant stack to deploy rollups (bottlenecked by collator IOPS @ ~80k tps with enomt). you just don’t need that much throughput until you actually do.

the real problem was composability. xcm async communication turned parachains into silos. 18-second round trips meant you couldn’t compose across chains. the unix philosophy of small programs doing one thing well fell apart. you couldn’t build a dex that calls a lending protocol that checks an oracle. too slow. so every parachain reimplemented everything locally. dex pallet. lending pallet. oracle pallet. stablecoin pallet. because of this latency you were forced to reimplement features your neighbor already had. no specialization, just duplication.

substrate made it worse. it was never something solo devs could work with. even teams burn out (e.g. hydration/interlay) doing framework upgrades instead of shipping product for users. every six months another breaking change. another migration. another round of debugging. omninode helps, but there are only a handful of teams left to use it.

jam fixes polkadot not by scaling even further but by enabling actual composability. the unix philosophy comes back. write small services that do one thing well. compose them. once graypaper 1.0 drops it will be a frozen spec. write a service in whatever compiles to risc-v. rust. c. zig. python. go. deploy it. done. and unlike evm this is a real virtual machine. quake 2 runs at full fps. continuous execution. no block gas limits. no cramming logic into 12 second windows hoping it fits.

work packages declare dependencies explicitly. the prerequisites field lists work packages that must accumulate first. segment_root_lookup references exports from other services. the runtime processes everything in dependency order automatically. no locks. no races. declare what you need and it happens atomically.
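a minimal sketch of dependency-ordered accumulation, using kahn's topological sort. the `WorkPackage` struct, the `u32` ids (standing in for package hashes) and `accumulation_order` are hypothetical illustrations, not polkajam's scheduler:

```rust
use std::collections::{HashMap, VecDeque};

/// Hypothetical work package: an id (standing in for the package hash)
/// plus the ids of packages that must accumulate before it.
#[derive(Debug, Clone)]
pub struct WorkPackage {
    pub id: u32,
    pub prerequisites: Vec<u32>,
}

/// Returns an accumulation order in which every package comes after its
/// prerequisites (Kahn's algorithm), or None if the dependencies form a
/// cycle or reference a package that is not in this batch.
pub fn accumulation_order(packages: &[WorkPackage]) -> Option<Vec<u32>> {
    let mut indegree: HashMap<u32, usize> = packages.iter().map(|p| (p.id, 0)).collect();
    let mut dependents: HashMap<u32, Vec<u32>> = HashMap::new();
    for p in packages {
        for &pre in &p.prerequisites {
            if !indegree.contains_key(&pre) {
                return None; // unknown prerequisite: cannot be satisfied here
            }
            *indegree.get_mut(&p.id).unwrap() += 1;
            dependents.entry(pre).or_default().push(p.id);
        }
    }
    // Seed with packages that have no unmet prerequisites, in input order,
    // so every node computes the same order.
    let mut ready: VecDeque<u32> = packages
        .iter()
        .filter(|p| indegree[&p.id] == 0)
        .map(|p| p.id)
        .collect();
    let mut order = Vec::with_capacity(packages.len());
    while let Some(id) = ready.pop_front() {
        order.push(id);
        for &next in dependents.get(&id).map(|v| v.as_slice()).unwrap_or(&[]) {
            let d = indegree.get_mut(&next).unwrap();
            *d -= 1;
            if *d == 0 {
                ready.push_back(next);
            }
        }
    }
    // Any package that never reached indegree 0 sits on a cycle.
    (order.len() == packages.len()).then_some(order)
}
```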

think of it as systemd for blockchains. service A needs oracle data from service B. A declares dependency on B’s work package hash. runtime ensures B accumulates before A. transfers between services are deferred until all accumulations complete. if something panics there’s a checkpoint system. imX holds working state. imY holds last checkpoint. panic reverts to imY. clean rollback semantics within a single atomic block.
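the imX/imY rollback fits in a few lines. this is a toy, assuming a `HashMap` for service state and an `Err` standing in for a pvm panic; `Accumulator`, `checkpoint` and `run` are hypothetical names:

```rust
use std::collections::HashMap;

type State = HashMap<String, u64>;

/// Toy accumulate-time state: `im_x` is the working copy service code
/// mutates, `im_y` is the last checkpoint.
pub struct Accumulator {
    im_x: State,
    im_y: State,
}

impl Accumulator {
    pub fn new(initial: State) -> Self {
        Self { im_x: initial.clone(), im_y: initial }
    }

    /// Commit the working state: a later panic only rolls back to here.
    pub fn checkpoint(&mut self) {
        self.im_y = self.im_x.clone();
    }

    /// Run one step of service logic; an Err plays the role of a panic.
    /// On panic the working state is discarded and reverts to the checkpoint.
    pub fn run<F>(&mut self, step: F) -> Result<(), String>
    where
        F: FnOnce(&mut State) -> Result<(), String>,
    {
        match step(&mut self.im_x) {
            Ok(()) => Ok(()),
            Err(e) => {
                self.im_x = self.im_y.clone();
                Err(e)
            }
        }
    }

    /// State that survives accumulation.
    pub fn finish(self) -> State {
        self.im_x
    }
}
```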

this is what the unix philosophy looks like on-chain. small focused services. explicit dependencies. composition instead of duplication. you can even deploy corevm as a service and build on top of it. corevm supports std rust today with extensibility for custom entrypoints.

and unlike most blockchain “composability” claims, you can actually inspect it. polkajam’s jamt provides modular trace streams. every opcode, pc, register, gas value, memory load/store goes to separate files. diff traces between implementations. grep for specific opcodes. O(1) seeking, O(log N) bisection. unix debugging works because you can pipe things. here you actually can.
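bisection works because divergence is sticky. a sketch of finding the first divergent step between two implementations, assuming each trace record is a running digest over all previous steps (a hypothetical format, not jamt’s actual file layout) and using an fnv-style mix as a stand-in hash:

```rust
/// Index of the first step where two traces differ, or None if they agree
/// on their common prefix. Assumes `a[i]` and `b[i]` are cumulative digests
/// (covering steps 0..=i), so if the traces match at step i they match at
/// every earlier step. That monotonicity is what makes binary search valid.
pub fn first_divergence(a: &[u64], b: &[u64]) -> Option<usize> {
    let n = a.len().min(b.len());
    if n == 0 || a[n - 1] == b[n - 1] {
        return None; // common prefix identical
    }
    // Invariant: steps before `lo` agree, step `hi` disagrees.
    let (mut lo, mut hi) = (0usize, n - 1);
    while lo < hi {
        let mid = lo + (hi - lo) / 2;
        if a[mid] == b[mid] {
            lo = mid + 1;
        } else {
            hi = mid;
        }
    }
    Some(lo)
}

/// Turn raw per-step values (pc, opcode, register hash, ...) into
/// cumulative digests with an FNV-1a-style mix (not cryptographic).
pub fn cumulative(steps: &[u64]) -> Vec<u64> {
    let mut acc: u64 = 0xcbf29ce484222325;
    steps
        .iter()
        .map(|&s| {
            acc = (acc ^ s).wrapping_mul(0x100000001b3);
            acc
        })
        .collect()
}
```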

jam also finally stops reinventing wheels. instead of yet another custom bytecode, risc-v. real isa. real toolchains. real jit. decades of compiler optimization just works. that’s the difference between evm that nobody optimizes and leveraging what silicon vendors and compiler teams have been perfecting since the 80s.

polkajam testnet (v0.1.27, 6-node local, kernel 6.17.4-xanmod):

| metric | interpreter | jit (compiler) |
|---|---|---|
| block import | 13.5 blocks/s | 30.5 blocks/s |
| trie insert (1M keys) | 552k keys/s | 552k keys/s |
| ec encode (14MB) | 56ms | 56ms |
| ec reconstruct | 122 MiB/s | 122 MiB/s |

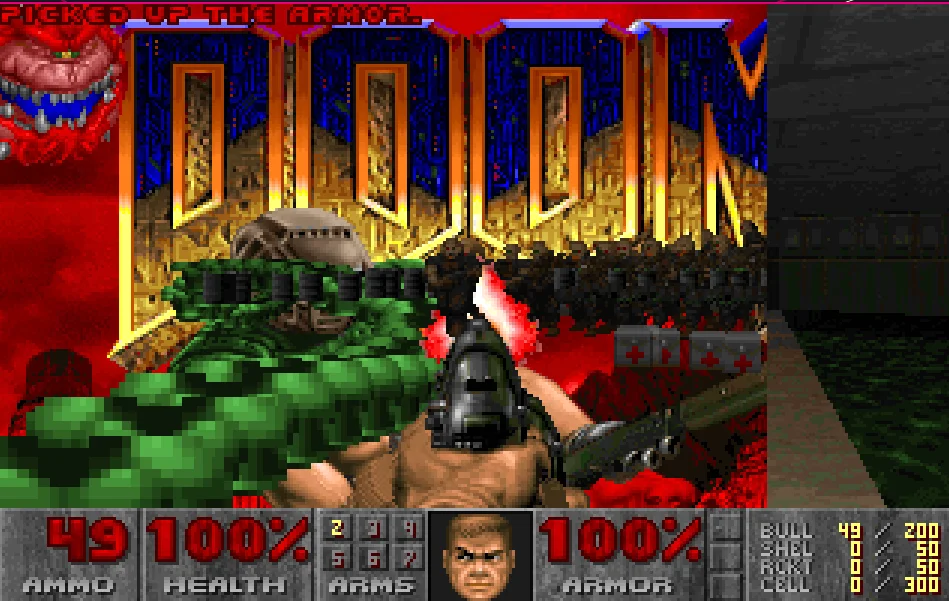

jit is 2.3x faster for block import. standalone polkavm shows a ~50x gap for compute-heavy workloads (1600fps jit vs 32fps interpreter). doom.corevm deploys via jamt vm new, chunking the 5.3MB binary across work packages. 320x200x24 video mode runs continuously.

the core model is dead simple. services have two functions. refine does stateless parallel work across cores. accumulate merges results into shared state.

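```rust
// stateless: runs in parallel across cores
fn refine(input: WorkPackage) -> WorkResult { ... }
// sequential: merges results into shared state
fn accumulate(results: Vec<WorkResult>, state: &mut State) { ... }
```

cross-service calls happen in one block. not 18 seconds through xcm. one single block.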
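a runnable toy of the two-phase model, assuming work packages are plain vectors of numbers and state is a running total. refine runs on scoped threads with no shared state; accumulate merges sequentially. every type here is a hypothetical stand-in, not the jam host interface:

```rust
use std::thread;

/// Hypothetical stand-ins for JAM's real work-package and state types.
pub struct WorkPackage {
    pub items: Vec<u64>,
}
pub struct WorkResult {
    pub sum: u64,
}
#[derive(Default)]
pub struct State {
    pub total: u64,
    pub packages_seen: u64,
}

/// Stateless: depends only on its input, so cores can run it in parallel.
pub fn refine(input: &WorkPackage) -> WorkResult {
    WorkResult { sum: input.items.iter().sum() }
}

/// Sequential merge of all refine outputs into shared state.
pub fn accumulate(results: Vec<WorkResult>, state: &mut State) {
    for r in results {
        state.total += r.sum;
        state.packages_seen += 1;
    }
}

/// One "block": refine every package on its own thread, then accumulate.
pub fn process_block(packages: &[WorkPackage], state: &mut State) {
    let results: Vec<WorkResult> = thread::scope(|s| {
        let handles: Vec<_> = packages.iter().map(|p| s.spawn(move || refine(p))).collect();
        handles.into_iter().map(|h| h.join().unwrap()).collect()
    });
    accumulate(results, state);
}
```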

safrole: eliminating accidental forks

polkadot’s original consensus (babe) had a fundamental problem: probabilistic block author selection. validators run a vrf lottery each slot. if your output is below threshold, you can produce a block. but what happens when two validators both win the lottery for the same slot? both produce valid blocks. fork. the network has to choose one and discard the other. this happens regularly - it’s baked into the design.
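a quick simulation of how often a per-slot threshold lottery produces competing authors. splitmix64 stands in for the vrf (it is not one), and `p` is a hypothetical per-validator win probability; babe’s secondary slots for otherwise empty slots are not modeled:

```rust
/// splitmix64: a small deterministic PRNG standing in for VRF outputs.
fn splitmix64(x: u64) -> u64 {
    let mut z = x.wrapping_add(0x9e3779b97f4a7c15);
    z = (z ^ (z >> 30)).wrapping_mul(0xbf58476d1ce4e5b9);
    z = (z ^ (z >> 27)).wrapping_mul(0x94d049bb133111eb);
    z ^ (z >> 31)
}

/// Per-slot outcome counts for a threshold lottery.
#[derive(Debug, Default, PartialEq)]
pub struct LotteryStats {
    pub empty: u32,     // nobody won the slot
    pub single: u32,    // exactly one winner: no fork
    pub contested: u32, // two or more winners: competing valid blocks
}

/// A validator wins a slot when its pseudo-VRF output, scaled to [0, 1),
/// falls below `p`. Contested slots are the accidental forks.
pub fn simulate(validators: u64, slots: u64, p: f64) -> LotteryStats {
    let mut stats = LotteryStats::default();
    for slot in 0..slots {
        let winners = (0..validators)
            .filter(|v| {
                let out = splitmix64(slot.wrapping_mul(1_000_003) ^ splitmix64(*v));
                (out as f64 / u64::MAX as f64) < p
            })
            .count();
        match winners {
            0 => stats.empty += 1,
            1 => stats.single += 1,
            _ => stats.contested += 1,
        }
    }
    stats
}
```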

safrole eliminates this class of fork entirely. instead of a probabilistic lottery per slot, validators submit “tickets” during epoch N that determine block authors for epoch N+1.

```
epoch N:   validators submit ring vrf proofs (tickets)
           best E tickets sorted by deterministic id
           ticket assignment: slot → single author
epoch N+1: each slot has exactly ONE valid author
           no ambiguity, no race, no accidental fork
```

the tickets use ring vrf - anonymous within the validator set. the ring signature proves “one of these 1023 validators submitted this ticket” without revealing which one. nobody knows who won which slot until they produce the block. but critically, there’s only ONE winner per slot. two honest validators will never both believe they should produce the same block.

note on fallback mode: safrole needs E tickets (one per slot) to fill an epoch. if validators don’t submit enough during the submission window (genesis, mass outages, small validator set), it falls back to round-robin based on bandersnatch public keys. this doesn’t affect fork safety - still one author per slot, still no accidental forks. the weakness is losing anonymity: everyone knows who’s producing next, making targeted dos attacks possible.
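a simplified sketch of ticket-to-slot assignment with the fallback described above. assumptions: tickets are plain (id, author) pairs with ring vrf verification elided, the best E tickets are assigned in ascending id order (the graypaper’s exact slot ordering isn’t reproduced), and fallback is plain round-robin over the validator list:

```rust
/// A submitted ticket. In safrole the author stays anonymous behind a ring
/// VRF until block production; it is stored here only so the sketch can
/// show who ends up owning each slot.
#[derive(Debug, Clone, Copy, PartialEq)]
pub struct Ticket {
    pub id: u64,
    pub author: usize,
}

#[derive(Debug, PartialEq)]
pub enum SlotAuthor {
    Ticket(usize),   // winning ticket's author (anonymous in reality)
    Fallback(usize), // round-robin over the validator key list
}

/// Assign exactly one author to each of `epoch_len` slots of epoch N+1.
pub fn assign_slots(mut tickets: Vec<Ticket>, epoch_len: usize, validators: usize) -> Vec<SlotAuthor> {
    // Deterministic order: every node sorting the same tickets agrees.
    tickets.sort_by_key(|t| t.id);
    tickets.dedup_by_key(|t| t.id);
    if tickets.len() >= epoch_len {
        // Enough tickets: keep the best E, one per slot.
        tickets.truncate(epoch_len);
        tickets.into_iter().map(|t| SlotAuthor::Ticket(t.author)).collect()
    } else {
        // Too few tickets: still one author per slot, but now predictable.
        assert!(validators > 0, "need at least one validator");
        (0..epoch_len).map(|slot| SlotAuthor::Fallback(slot % validators)).collect()
    }
}
```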

why does this matter? shielded state. when you spend a shielded note, you reveal a nullifier. if that block gets orphaned, the nullifier was visible - observers learn your spend was in that batch. with a small anonymity set, that’s significant entropy loss. larger pools help but any reorg leaks information you can’t take back.

and nullifiers are just the simple case. think through penumbra’s zswap:

- users submit encrypted swap intents during a block

- batch auction computes uniform clearing price from all intents

- flow encryption: validators threshold-decrypt only the aggregate flow

- individual amounts stay hidden, only aggregate revealed
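a toy of the batch-auction idea with plaintext amounts. it assumes a single constant-product pool, which is a simplification (penumbra’s dex routes across liquidity positions). all intents are summed into one aggregate flow, the aggregate trades once, and each intent gets a pro-rata share, so everyone in the batch clears at the same price:

```rust
/// Constant-product pool x * y = k, a stand-in for the real liquidity.
pub struct Pool {
    pub reserve_in: u128,
    pub reserve_out: u128,
}

/// Clear a batch of swap intents (amounts of the input asset) at one
/// uniform price. Returns each intent's output, in input order.
pub fn clear_batch(pool: &mut Pool, intents: &[u128]) -> Vec<u128> {
    // The only number that needs revealing (threshold-decrypted in penumbra).
    let flow: u128 = intents.iter().sum();
    if flow == 0 {
        return vec![0; intents.len()];
    }
    // The whole batch trades once against the pool (no fee).
    let out_total = pool.reserve_out * flow / (pool.reserve_in + flow);
    // Pro-rata split: same output per unit of input for everyone,
    // independent of ordering inside the batch. Rounding dust stays in the pool.
    let payouts: Vec<u128> = intents.iter().map(|&a| out_total * a / flow).collect();
    pool.reserve_in += flow;
    pool.reserve_out -= payouts.iter().sum::<u128>();
    payouts
}
```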

now imagine babe forks:

- chain A reveals aggregate flow F1, your swap executes at price P1

- chain B has different swap set, reveals flow F2, clears at price P2

- network picks chain B. your swap on chain A is orphaned

- but F1 was already threshold-decrypted. that aggregate is public forever

- you resubmit your swap - now observers know you were in the orphaned batch

it gets worse with delegations. penumbra has private staking - delegation amounts shielded, transitions happen at epoch boundaries. fork during epoch transition means your delegation state diverges. your wallet’s view of your own balance is wrong until you re-scan the canonical chain.

in vm design, the benchmark is “can it run doom”. in blockchain design, the question should be “can it run penumbra”. if your consensus can’t handle shielded state without leaking on forks, you haven’t solved the hard problem.

this is why penumbra never built on polkadot. i discussed this with henry de valence at shielding summit in bangkok. babe’s regular forks made shielded apps impossible.

safrole fixes the common case - accidental forks gone. but reorgs are still possible from:

- equivocation: slotted validator announces more than one block. slashable, but the reorg happens before punishment lands. in practice this happens when operators inject keys into two machines - bad operational hygiene, not malice. jam clients should prevent this by design. keys belong in yubikey hsm or tpm, not copied between boxes. tpm spec 1.84+ finally supports ed25519, but current hardware doesn’t ship it - amd 7945hx (2024) runs ftpm spec 1.59. by the time 1.84 hardware is purchasable, ed25519 might be deprecated for post-quantum alternatives. convenient timing.

- netsplit: hurricane electric cuts peering with cogent (as6939 ↔ as174). validators on different sides of the partition build on different blocks. partition heals, grandpa finalizes one chain, other orphaned. no malice required - just infrastructure politics.

the remaining edge cases are rare - equivocation slashings happen maybe once a year on polkadot, not daily like babe’s accidental forks. for most shielded use cases, that’s an acceptable tradeoff.

if you’re building a penumbra-like chain on jam, two options. conservative: wait for grandpa finality before allowing shielded actions. watch the finality stream, only mark notes spendable once their block is final. 12-18 second wait on every interaction.

or accept the risk and act on non-final blocks. equivocation means massive slashing - attacking your privacy costs the validator their entire stake. netsplits are rare infrastructure events. but then you need reorg handling: detect orphaned blocks, resync to canonical chain, recalculate spendable notes. privacy leak is rare, but when it happens you still need to stay functional. more complexity for better ux. your call.
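both options can share one wallet-side note index. a sketch, assuming hypothetical `Note`/`NoteIndex` types fed by a block-import and finality stream: `spendable` is the conservative rule, `on_reorg` is the reorg handling for the optimistic path:

```rust
use std::collections::HashSet;

pub type BlockHash = [u8; 32];

#[derive(Debug, Clone, PartialEq)]
pub struct Note {
    pub value: u64,
    pub block: BlockHash,
    pub height: u64,
}

#[derive(Default)]
pub struct NoteIndex {
    notes: Vec<Note>,
    finalized_height: u64,
}

impl NoteIndex {
    pub fn add(&mut self, note: Note) {
        self.notes.push(note);
    }

    /// Fed from the grandpa finality stream.
    pub fn on_finalized(&mut self, height: u64) {
        self.finalized_height = self.finalized_height.max(height);
    }

    /// Conservative option: only notes in finalized blocks may be spent.
    pub fn spendable(&self) -> Vec<&Note> {
        self.notes.iter().filter(|n| n.height <= self.finalized_height).collect()
    }

    /// Optimistic option: drop notes from orphaned blocks and return them
    /// so the caller can re-scan the canonical chain.
    pub fn on_reorg(&mut self, orphaned: &HashSet<BlockHash>) -> Vec<Note> {
        let (dropped, kept): (Vec<Note>, Vec<Note>) =
            self.notes.drain(..).partition(|n| orphaned.contains(&n.block));
        self.notes = kept;
        dropped
    }

    /// Optimistic balance, including non-final notes.
    pub fn balance(&self) -> u64 {
        self.notes.iter().map(|n| n.value).sum()
    }
}
```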

for cross-chain shielded composability under the same assumptions, speculative messaging replaces hrmp with off-chain message passing and merkle mountain range commitments. collator acknowledgements add economic guarantees - lying about message availability is slashable. combined with safrole’s single-author slots, you get parachain-speed cross-chain messaging within trust domains. still optimistic (reorgs possible before grandpa), but the economic deterrents stack.
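a minimal append-only merkle mountain range, the kind of structure behind those commitments. peaks are perfect subtrees kept in a stack, appends merge equal-height peaks like a binary carry, and the root bags the peaks right to left. `DefaultHasher` is a non-cryptographic stand-in and the bagging order is an assumption; real mmr implementations differ in details:

```rust
use std::collections::hash_map::DefaultHasher;
use std::hash::{Hash, Hasher};

/// Non-cryptographic stand-in for a real hash function.
fn h(parts: &[u64]) -> u64 {
    let mut s = DefaultHasher::new();
    parts.hash(&mut s);
    s.finish()
}

/// Peaks are (height, root), strictly decreasing in height:
/// one peak per set bit of the leaf count.
#[derive(Default)]
pub struct Mmr {
    peaks: Vec<(u32, u64)>,
    leaves: u64,
}

impl Mmr {
    pub fn append(&mut self, leaf: u64) {
        let mut node = (0u32, h(&[leaf]));
        // Merge equal-height peaks, like carrying in binary addition.
        while let Some(&(height, root)) = self.peaks.last() {
            if height != node.0 {
                break;
            }
            self.peaks.pop();
            node = (height + 1, h(&[root, node.1]));
        }
        self.peaks.push(node);
        self.leaves += 1;
    }

    /// Commit to all peaks ("bagging"), right to left, seeded with the size.
    pub fn root(&self) -> u64 {
        self.peaks.iter().rev().fold(h(&[self.leaves]), |acc, &(_, p)| h(&[p, acc]))
    }

    pub fn peak_count(&self) -> usize {
        self.peaks.len()
    }
}
```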

where jam falls apart

light clients

so far, light clients only verify block authenticity, not validity. authenticity: a validator signed it. validity: the state transition is correct. different security properties.

a malicious validator signs a block with invalid state transitions. a light client following the chain head accepts it, and only grandpa finality settles whether it holds. documented trust model says “trustless.” actual trust model so far: trust validators or wait 12-18 seconds.

jam uses 1D reed-solomon for data availability. efficient but optimistic. malicious producer can distribute shards that individually look valid but reconstruct to garbage. light client can’t detect this. rob’s justification: fits ELVES model, faster. but after the 2025 zoda paper, 1D is hard to justify.
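what “reconstruct to garbage” means, in a toy over the prime field p = 65537 with hypothetical parameters k = 2 and n = 4. each shard is an evaluation of a degree < k polynomial, so any k shards should decode to the same data. tamper with one shard and it still looks like a shard on its own, but different k-subsets now disagree:

```rust
const P: u64 = 65_537; // small prime field, illustration only

fn pow_mod(mut b: u64, mut e: u64) -> u64 {
    let mut r = 1;
    b %= P;
    while e > 0 {
        if e & 1 == 1 {
            r = r * b % P;
        }
        b = b * b % P;
        e >>= 1;
    }
    r
}

/// Modular inverse via Fermat's little theorem (a must be non-zero).
fn inv(a: u64) -> u64 {
    pow_mod(a, P - 2)
}

/// Encode k data symbols (polynomial coefficients) as evaluations at x = 1..=n.
pub fn encode(data: &[u64], n: u64) -> Vec<(u64, u64)> {
    (1..=n)
        .map(|x| (x, data.iter().rev().fold(0, |acc, &c| (acc * x + c) % P))) // Horner
        .collect()
}

/// Recover the first data symbol (the value at x = 0) from any k shards
/// by Lagrange interpolation.
pub fn decode_first_symbol(shards: &[(u64, u64)]) -> u64 {
    let mut acc = 0;
    for (i, &(xi, yi)) in shards.iter().enumerate() {
        // Lagrange basis at 0: prod_{j != i} (0 - xj) / (xi - xj)
        let (mut num, mut den) = (1, 1);
        for (j, &(xj, _)) in shards.iter().enumerate() {
            if i != j {
                num = num * (P - xj) % P;
                den = den * ((xi + P - xj) % P) % P;
            }
        }
        acc = (acc + yi * num % P * inv(den)) % P;
    }
    acc
}
```

with honest shards, both halves decode to 42; after one shard is bumped by 1, the first half still says 42 and the second half says something else, and nobody holding a single shard can tell.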

zoda fixes both problems. 2D encoding where each shard includes checksum proving valid encoding. receivers verify immediately. commonware’s implementation shows ~2.1x overhead vs ~8x for 2D KZG at scale - no trusted setup, no elliptic curves, just field ops and hashing. light clients verify DA with ~300kb samples.

key insight from the accidental computer: 2D polynomial structure that proves DA can also encode validity constraints. same commitment proves both.

- 1D reed-solomon: proves data exists. nothing about validity. need re-execution.

- 2D encoding: proves data exists AND is valid. constraints baked into polynomial structure.

light clients sample and verify both properties. no waiting for finality. no trusting validators.

zoda with mmr also strengthens bridging. beefy gives compact finality proofs that validators signed this. you’re still trusting signed data is correct. zoda adds DA guarantees - data exists and is retrievable. combined with accidental computer’s validity encoding, external chains verify both availability and correctness without trusting relayers.

alternative stacks are already moving on this. celestia’s fibre builds their 1tb/s DA layer on zoda - 881x faster than KZG, recovery from any 1/3 honest validators. commonware uses zoda for block dissemination with immediate guarantees. jam staying on 1D reed-solomon while competitors ship 2D is a gap that matters for light client latency and security.

network

graypaper specifies ~387 Mb/s TX, ~357 Mb/s RX. recommends 500 Mb/s sustained with margin.

geographic concentration follows. residential 500 Mb/s symmetric costs $50-200/month in wealthy countries, $500-2000 in southeast asia, and is unavailable elsewhere. and residential means oversubscribed - isps sell the same capacity to 20-50 households assuming they won’t all use it simultaneously. at peak hours (evenings, weekends) your “500 Mb/s” becomes 100 Mb/s or worse. validators need consistent throughput, not best-effort. business-grade with an SLA runs $500-1500/month. that 10x cost jump prices out hobbyist validators.

you’d think bandwidth gets cheaper - 1Tbit switch ports are increasingly affordable, core network costs are dropping. but the last mile costs the most, and that’s where monopolies live. isps are losing application-layer revenue to quic (they can’t inject ads or sell “zero-rating” deals when everything’s encrypted end-to-end), so they squeeze harder on transport. and with compute costs rising across the board (electricity, hardware, cooling), isps will follow even when there’s no direct reason - “costs are up everywhere” is excuse enough. until validators multihome with their own v6 resources and buy peering/transit directly from hurricane electric or local ixps, savings are unlikely to trickle down. and at 500 Mbps volumes, bypassing doesn’t make sense anyway - the best deal without pooling is ~$1/Mbit, up to 10x more depending on region. that’s $500-5000/month for transit alone, before you count colo and hardware. the economics only favor bypass at scale, which means validator pools or professional operators.

jam mandates ipv6 and quic. the rationale should be p2p friendliness (no nat, every node directly addressable), not resilience - ipv6 is significantly less resilient. full v6 bgp table is ~250k routes vs ipv4’s ~1M. fewer routes means fewer paths, less redundancy when links fail. quic blocked in china. validator set will concentrate in well-connected datacenters.

w3f’s decentralized nodes program missed an opportunity here - three cohorts of benchmarking without advocating for v6 addresses as a jam-ready networking requirement. high mvr (missed vote ratio) on polkadot validators is more often caused by networking timeouts than compute bottlenecks. the transition will hurt. there’s good reason v6 adoption has taken three decades - it’s not inertia, it’s extra work on an already complex subject for barely any gain from the isp perspective. networking is hard enough without maintaining parallel stacks.

no network adapters

jam services can’t initiate outbound connections. no http. no websockets. no dns. determinism requires it - validator A calls oracle, gets price $100. validator B calls same oracle 50ms later, gets $100.02. who’s wrong? neither. network is non-deterministic by nature.

corevm CAN have networking - parity built socket syscall extensions for polkakernel, and musl libc runs sandboxed with the host providing actual sockets. but for jam validators it’s a non-starter. refine() must return identical results given identical inputs.

the solution: networking happens off-chain, validation happens on-chain. we’ve built this with jam-netadapter:

```
┌────────────────────────────────────────────────────────────────────────────┐
│ off-chain (auxiliary)                                                      │
│  ┌──────────┐   ┌─────────────┐                                            │
│  │  worker  │   │ aggregator  │   workers fetch http/dns/timestamps        │
│  │ (oracle) │──→│ (threshold) │   sign responses with ed25519              │
│  └──────────┘   └──────┬──────┘   aggregator collects until 2/3 threshold  │
└────────────────────────┼───────────────────────────────────────────────────┘
                         │ work items with threshold signatures
                         ▼
┌────────────────────────────────────────────────────────────────────────────┐
│ jam-service (on-chain)                                                     │
│  ┌──────────────────────────────────────────────────────────────────────┐  │
│  │ refine: count valid worker signatures, reject if < threshold         │  │
│  │ accumulate: store oracle data keyed by request_id                    │  │
│  └──────────────────────────────────────────────────────────────────────┘  │
│ state: validated oracle responses + namespaces + sla measurements          │
└────────────────────────────────────────────────────────────────────────────┘
```

workers fetch arbitrary external data - http responses, dns lookups, price feeds, timestamps. each worker signs their response. the jam service (polkavm guest, riscv32em) only validates signatures. signature verification is deterministic - all validators get the same result.

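```rust
// refine phase - validate threshold signatures
fn refine_oracle(payload: &[u8]) -> RefineOutput {
    // `?` doesn't compile in a fn returning RefineOutput, so a malformed
    // payload yields the default (invalid) output instead.
    // assumes decode returns a Result and RefineOutput implements Default.
    let Ok(response) = OracleResponse::decode(payload) else {
        return RefineOutput::default();
    };
    let threshold = storage::get_threshold();
    let worker_keys = storage::get_worker_keys();
    // deterministic on every validator: same payload + keys, same count.
    // note: assumes each signature comes from a distinct worker;
    // duplicates from one worker would be double-counted.
    let valid_sigs = response
        .signatures
        .iter()
        .filter(|sig| verify_worker_signature(sig, &response, &worker_keys))
        .count();
    RefineOutput {
        valid: valid_sigs >= threshold,
        data_hash: sha256(&response.data),
        request_id: Some(response.request_id),
        // `..` needs a base expression; remaining fields take their defaults
        ..Default::default()
    }
}
```

this solves the determinism problem cleanly. validator A and B might get different responses from the same oracle at different times - that’s fine, they’re not calling the oracle. they’re validating that N workers already agreed on a value and signed it. same signatures, same verification result.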

the service also handles the .alt namespace (reserved by rfc 9476 for alternative naming systems) - domain registration, updates, transfers all validated on-chain. and sla monitoring with commit-reveal to prevent probes from copying each other’s measurements.
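a sketch of a commit-reveal round for sla probes. each probe first publishes a hash binding its id, its measurement and a salt; reveals only count after commitments close and only if they match. `SlaRound` is hypothetical, and `DefaultHasher` is a non-cryptographic stand-in for something like sha256:

```rust
use std::collections::hash_map::DefaultHasher;
use std::collections::HashMap;
use std::hash::{Hash, Hasher};

/// Stand-in commitment H(probe_id, latency_ms, salt). Not cryptographic.
/// Binding the probe id stops one probe replaying another's commitment.
fn commitment(probe: u32, latency_ms: u32, salt: u64) -> u64 {
    let mut h = DefaultHasher::new();
    (probe, latency_ms, salt).hash(&mut h);
    h.finish()
}

#[derive(Default)]
pub struct SlaRound {
    commits: HashMap<u32, u64>,
    reveals: HashMap<u32, u32>,
    reveal_phase: bool,
}

impl SlaRound {
    /// Phase 1: probes submit only the hash of their measurement.
    pub fn commit(&mut self, probe: u32, c: u64) -> bool {
        if self.reveal_phase || self.commits.contains_key(&probe) {
            return false; // late or duplicate commitment
        }
        self.commits.insert(probe, c);
        true
    }

    /// Close commitments; from now on only reveals are accepted.
    pub fn close_commits(&mut self) {
        self.reveal_phase = true;
    }

    /// Phase 2: a reveal counts only if it matches the earlier commitment.
    pub fn reveal(&mut self, probe: u32, latency_ms: u32, salt: u64) -> bool {
        let ok = self.reveal_phase
            && self.commits.get(&probe) == Some(&commitment(probe, latency_ms, salt));
        if ok {
            self.reveals.insert(probe, latency_ms);
        }
        ok
    }

    pub fn measurements(&self) -> &HashMap<u32, u32> {
        &self.reveals
    }
}
```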

for .alt to be a realistic option without causing utter slowdown, you still want globally distributed anycast resolvers. sub-50ms recursive resolution or nobody will use it. the resolver component handles this - recursive dns with a .alt gateway that queries jam state for *.jam.alt, ens for *.eth.alt, handshake for *.hs.alt. the same ip is announced from multiple locations, and bgp routes queries to the nearest one.

the bottleneck isn’t technical - it’s educational. decentralized anycast means shared ip resources announced from multiple locations, operators peering with each other over zerotier for internal coordination. realistic path: start with rage4.com anycast ip resources - they handle the bgp announcements while you focus on getting resolvers running. eventually graduate to your own ip space and peering agreements.

the hard part is internal networking work from all participants. configuring birdc, establishing zerotier mesh, coordinating announcements - this won’t happen overnight. most operators have never touched bgp. we’ve documented our setup at rotkonetworks/networking but it’s still a significant learning curve for anyone not already running their own asn.

traffic steering as an incentivized jam service could be extremely interesting. a global singleton that coordinates routing decisions - which pop handles which queries, load balancing across operators, failover when nodes go down. sla monitoring feeds real latency data, service adjusts steering weights, operators get paid proportional to traffic served. cloudflare’s traffic manager but as a public good with cryptoeconomic guarantees. great stepping stone demo for broader adoption - technical people at rirs and ixps negotiating peering contracts would love more transparency and public state for routing decisions.

state economics

polkadot handles 20k tps per parachain. solana does 200-300 actual user interactions per second. we’re not capacity constrained. we’re ux constrained. gatekeeping this capacity with compulsory fee mechanisms or complex identity rituals is overkill.

but maybe the gatekeeping is why polkadot runs empty. 20k tps capacity, 0.3 tps usage. penumbra tried the opposite - $0.000009 withdrawal fees, no barriers - and still sees 3 withdrawals per day. high friction or no friction, same result: empty chains. the answer isn’t at either extreme.

why shielded state needs utxo

account model: balance stored at address. transfer updates sender and receiver balances. simple. but every state transition is visible - balance before, balance after, delta computed trivially. “privacy” means hiding WHO transacted, not WHAT changed. that’s not privacy, it’s pseudonymity with extra steps.

utxo/note model: no balances. just unspent outputs. spending reveals a nullifier - a commitment to “this note is now spent” - without revealing WHICH note. observers see nullifiers appear but can’t link them to specific outputs. the anonymity set is all unspent notes, not just current transaction participants.

penumbra, zcash, aztec all use note-based models. not because utxo is elegant (it’s not - wallet complexity is real) but because account model fundamentally leaks state transitions. you can encrypt the amounts, but if i see your account touched in block N, i learned something. with notes, all i see is “someone spent something from the pool of all notes ever created.”
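a toy of the note model, assuming a hypothetical nullifier derivation H(nullifier_key, commitment) and a non-cryptographic stand-in hash. the pool keeps an append-only list of commitments and a set of nullifiers; it rejects a repeated nullifier without learning which commitment was spent. the zk proof that the nullifier belongs to some commitment in the tree is elided:

```rust
use std::collections::hash_map::DefaultHasher;
use std::collections::HashSet;
use std::hash::{Hash, Hasher};

/// Non-cryptographic stand-in hash.
fn h<T: Hash>(v: &T) -> u64 {
    let mut s = DefaultHasher::new();
    v.hash(&mut s);
    s.finish()
}

pub struct Note {
    pub value: u64,
    pub owner_key: u64,
    pub rseed: u64,
}

pub fn note_commitment(n: &Note) -> u64 {
    h(&("cm", n.value, n.owner_key, n.rseed))
}

/// Only the owner, who knows `nullifier_key`, can compute this.
pub fn nullifier(nullifier_key: u64, cm: u64) -> u64 {
    h(&("nf", nullifier_key, cm))
}

#[derive(Default)]
pub struct ShieldedPool {
    commitments: Vec<u64>,    // append-only: spent notes are never removed
    nullifiers: HashSet<u64>, // spent markers, unlinkable to commitments
}

impl ShieldedPool {
    pub fn output(&mut self, cm: u64) {
        self.commitments.push(cm);
    }

    /// Accept a spend iff its nullifier is fresh.
    pub fn spend(&mut self, nf: u64) -> bool {
        self.nullifiers.insert(nf)
    }

    pub fn commitment_count(&self) -> usize {
        self.commitments.len()
    }
}
```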

the state bloat problem

here’s what nobody talks about: shielded notes persist forever.

transparent utxo chains can prune spent outputs. once spent, the output is gone. state grows with UNSPENT outputs, not total history. but shielded chains can’t do this. the commitment tree - the merkle structure proving notes exist - must keep every commitment ever made. why? because revealing which commitments correspond to spent notes would leak the nullifier-to-note mapping. that’s the whole secret.

penumbra has ~53 million um in its shielded pool. that’s millions of notes in the commitment tree. every dust note from every split transaction. every change output. permanent state. the tree only grows.

this is why fees matter even in a world with computational proofs. spam protection gates WHO can submit. but it doesn’t address WHAT gets stored. without fees, users split notes into dust without penalty. convenient for privacy (more outputs = larger anonymity set) but unsustainable for state growth.

penumbra burns fees - more usage = scarcer supply. but at $0.000009 per withdrawal, the burn is decorative. 18 months of operation, ~75k um total burned - but most of that is dex arbitrage, not tx fees. actual transaction fees: ~420 um total. monthly issuance is ~33k um. the fee mechanism exists but doesn’t bite.

separating submission from persistence

the insight: rate-limiting and state-limiting are different problems. conflating them creates the adoption trap - make submission expensive to limit state, kill casual usage.

submission: computational proof

- ligerito proves 1M elements in 50ms

- bound to recent blockhash (no precomputation)

- browser does work silently, user clicks submit

- gates rate, not access - no upfront capital

persistence: identity OR economic stake

- shielded notes default to ephemeral

- pruned after N epochs unless renewed

- prove personhood -> permanent state allocation

- OR deposit collateral -> permanent until withdrawn

first interaction is frictionless. your browser generates a proof, you submit, you get temporary shielded state. want permanence? prove you’re human or stake something.

for shielded pools: ephemeral notes solve dust. split your note into 100 pieces for privacy - fine, but they expire in 30 days unless consolidated or backed by identity. state growth becomes bounded by active users, not cumulative history.
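the persistence tiers can be sketched as a note store with expiry. `Backing`, `NoteStore` and the epoch-based `ttl_epochs` are hypothetical; this models only the bookkeeping, not how personhood or collateral would be proven:

```rust
use std::collections::HashMap;

#[derive(Debug, Clone, Copy, PartialEq)]
pub enum Backing {
    Ephemeral { expires_at: u64 }, // default for proof-of-work submissions
    Personhood,                    // permanent allocation for a proven human
    Collateral { amount: u64 },    // permanent until the deposit is withdrawn
}

pub struct NoteStore {
    notes: HashMap<u64, Backing>, // note commitment -> backing
    ttl_epochs: u64,
}

impl NoteStore {
    pub fn new(ttl_epochs: u64) -> Self {
        Self { notes: HashMap::new(), ttl_epochs }
    }

    pub fn insert_ephemeral(&mut self, cm: u64, now: u64) {
        self.notes.insert(cm, Backing::Ephemeral { expires_at: now + self.ttl_epochs });
    }

    /// Renewing (e.g. consolidating) pushes an ephemeral note's expiry forward.
    pub fn renew(&mut self, cm: u64, now: u64) -> bool {
        match self.notes.get_mut(&cm) {
            Some(Backing::Ephemeral { expires_at }) => {
                *expires_at = now + self.ttl_epochs;
                true
            }
            _ => false,
        }
    }

    /// Upgrade a note to identity- or collateral-backed persistence.
    pub fn back(&mut self, cm: u64, backing: Backing) -> bool {
        match self.notes.get_mut(&cm) {
            Some(b) => {
                *b = backing;
                true
            }
            None => false,
        }
    }

    /// Drop expired ephemeral notes; returns how many were pruned.
    pub fn prune(&mut self, now: u64) -> usize {
        let before = self.notes.len();
        self.notes
            .retain(|_, b| !matches!(b, Backing::Ephemeral { expires_at } if *expires_at <= now));
        before - self.notes.len()
    }

    pub fn len(&self) -> usize {
        self.notes.len()
    }
}
```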

the risk: ephemeral notes leak timing. if your note expires without renewal, observers learn something. but that’s opt-in - users who need long-term privacy will prove identity or pay. casual users get temporary privacy for free. different tiers for different needs.

computational proofs as spam protection

proof of personhood has real value in the ai era - maybe the last moment to establish it before synthetic video makes verification impossible. but slamming identity requirements on first interaction won’t bring adoption.

captcha worked because grandma could click traffic lights. computational proofs could hit the same ux - browser does work silently, user clicks submit, done. ligerito proves 1M elements in 50ms. binding proofs to recent blockhashes prevents precomputation - you can’t stockpile proofs, each one requires fresh work.
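ligerito itself is a polynomial commitment scheme and isn’t reproduced here. as a stand-in for “fresh work bound to a recent blockhash”, this sketch swaps in a hashcash-style puzzle: find a nonce so the hash of (blockhash, payload, nonce) has `difficulty` leading zero bits. the freshness window is what stops stockpiling. difficulty, window size and `DefaultHasher` (not cryptographic) are all assumptions:

```rust
use std::collections::hash_map::DefaultHasher;
use std::hash::{Hash, Hasher};

fn work_hash(blockhash: u64, payload: &[u8], nonce: u64) -> u64 {
    let mut h = DefaultHasher::new();
    (blockhash, payload, nonce).hash(&mut h);
    h.finish()
}

/// Client side: grind nonces until the hash clears the difficulty target.
/// Expected cost is about 2^difficulty hashes.
pub fn solve(blockhash: u64, payload: &[u8], difficulty: u32) -> u64 {
    (0u64..)
        .find(|&n| work_hash(blockhash, payload, n).leading_zeros() >= difficulty)
        .expect("nonce space exhausted")
}

/// Verifier side: one hash plus a freshness check.
/// `recent` holds recent block hashes, newest last.
pub fn verify(recent: &[u64], max_age: usize, blockhash: u64, payload: &[u8], nonce: u64, difficulty: u32) -> bool {
    let window = &recent[recent.len().saturating_sub(max_age)..];
    window.contains(&blockhash)
        && work_hash(blockhash, payload, nonce).leading_zeros() >= difficulty
}
```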

asymmetric proof/verify enables tiered access without paywalls. 1M parameter proof gets you 20% of network capacity. 16M parameters gets 30%. heavier compute, more access. no identity ritual, just cpu cycles. cpu-optimized proof params tied to recent blockhashes prevent gpu farm precompute.

proof of personhood for validator selection, computational proofs for spam, economic stake for permanent storage. different tools for different problems. the question is whether jam services can implement this tiered model or if it needs protocol-level support.

information economics

the deeper framing: fees should reflect information value, not resource consumption.

as more chains adopt shielded state as default, the privacy set grows. the larger the pool, the more valuable the information leaked by leaving it. withdrawals decrease the anonymity set and leak information - you’re extracting from the organism. deposits increase the set - you’re contributing. the asymmetry should match:

- deposits: free or subsidized. you’re adding to collective privacy.

- internal transfers: minimal. shuffling within the pool costs little.

- withdrawals: expensive. you’re leaking information and shrinking the set.

this isn’t about punishing exits. it’s pricing the externality. when you withdraw, everyone else’s privacy decreases slightly. when you deposit, everyone benefits. fee structure should reflect that.

all markets are information markets. consider poker: in a heads-up game, only you and your opponent see showdown hands. that information is private - and valuable. if you played against a known high-stakes player, the data about how they played specific hands becomes a tradeable asset. you could sell it. others would buy it to exploit patterns. the player themselves might contract you to keep the hand private. information has a price because information confers advantage.

now scale that intuition. hft front-runs your trades because they see order flow you don’t. recommendation engines know your preferences before you do. adtech tracks you across the web and sells access to your attention. humans have already lost this battle to algorithms. designing protocols around government compliance is extremely short-sighted - it optimizes for a specific adversary while ignoring the broader surveillance infrastructure already deployed against you.

the real threat isn’t regulators. it’s the information asymmetry baked into every interaction with systems that know more about you than you know about yourself. shielded state isn’t about hiding from governments - it’s about not feeding the machine. every transparent transaction trains the models. every public balance updates the graph. privacy is defense against algorithmic suppression, not just legal prosecution.

this shifts the mental model. we’re not building “compliant privacy” or “regulatory-friendly anonymity.” we’re building information sovereignty. the organism protects itself by making extraction expensive and contribution free.

the path to free usage

the optimistic take: we’re reaching scale where these systems can function sustainably as free to use - just like web2 apps. ligerito verifies in 2ms. polkavm jit runs at 1600 fps. the marginal cost per transaction approaches zero. verification is no longer the bottleneck.

the question shifts from “how do we charge per tx” to “who subsidizes infrastructure” - exactly how web2 works. premium tiers, institutional users, those who need permanent state - they cover the free tier. casual users get ephemeral shielded access for free. freemium model applied to privacy. the tech is finally there; the economics just need to catch up.

what would enable shielded apps

shielded protocols need deterministic finality. when you spend a note, you reveal a nullifier. if that block reverts, the nullifier was visible but the note is still spendable. unlinkability is broken permanently. this isn’t theoretical - it’s why penumbra chose tendermint over polkadot.

the finality problem

tendermint: consensus happens before block production. propose → prevote → precommit → commit. 2/3 must agree at each stage or no block exists. you never see a block that might revert.

jam/polkadot: produce block first, finalize later with grandpa. faster block times, but creates a finality gap. safrole eliminates accidental forks, but equivocation and netsplits still cause reorgs before grandpa catches up.

tendermint’s constraint: every validator must see every other validator’s vote at each round. O(n²) messages, but the real limit is latency - three network round-trips per block. cosmos hub runs 180 validators; could push higher but finality time degrades. polkadot scales to 1000+ because grandpa finalizes chains, not individual blocks.

osst: scaling threshold agreement

osst removes tendermint’s scaling barrier. O(n) aggregation instead of O(n²) messaging:

- block producer proposes

- validators sign commitment independently (no coordination)

- aggregator collects 683+ contributions

- threshold signature proves 2/3 committed

same finality guarantee as tendermint. scales to 1000 validators.

grandpa’s multi-round protocol (prevote → precommit) with network round-trips becomes single-round aggregation. accountability is preserved - each contribution (index, commitment, response) is a schnorr proof. the same index in conflicting finality proofs = slashable equivocation.
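the aggregator’s bookkeeping can be sketched with the cryptography stripped out. contributions are (validator index, block hash) pairs with the schnorr response elided; a block finalizes once floor(2n/3) + 1 distinct indices back it, and an index seen on two different blocks is flagged. names and types are hypothetical, not the zeratul/osst api:

```rust
use std::collections::{HashMap, HashSet};

pub type BlockHash = u64;

/// Supermajority threshold: floor(2n/3) + 1.
pub fn threshold(n: usize) -> usize {
    2 * n / 3 + 1
}

#[derive(Default)]
pub struct Aggregator {
    votes: HashMap<BlockHash, HashSet<u32>>, // block -> contributing indices
    seen: HashMap<u32, BlockHash>,           // index -> first block it backed
    pub equivocators: HashSet<u32>,
}

impl Aggregator {
    /// Record one contribution: O(1) work each, O(n) in total, instead of
    /// every validator exchanging votes with every other one (O(n^2)).
    /// Returns Some(block) once that block crosses the threshold.
    pub fn add(&mut self, index: u32, block: BlockHash, n: usize) -> Option<BlockHash> {
        let prev = self.seen.get(&index).copied();
        match prev {
            Some(p) if p != block => {
                // Same index on two conflicting blocks: slashable evidence.
                self.equivocators.insert(index);
                return None;
            }
            Some(_) => return None, // duplicate contribution, ignore
            None => {
                self.seen.insert(index, block);
            }
        }
        let set = self.votes.entry(block).or_default();
        set.insert(index);
        (set.len() >= threshold(n)).then_some(block)
    }
}
```

with n = 1023 the threshold comes out to 683, the figure used above.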

jam’s deeper constraint

osst solves signature aggregation. but jam requires sharded re-execution - validators must verify work reports before signing finality. can’t finalize what you haven’t checked.

this is the actual blocker. not signing speed, but verification time.

execution proofs: removing re-execution

block producer proves correct execution once. validators verify the proof instead of re-executing.

ligerito verification: ~65ms at 2^24 elements. re-execution time scales linearly with computation. proof verification scales logarithmically - 2ms at 2^20, 65ms at 2^24. the gap widens as programs get larger.

the accidental computer shows how 2D polynomial commitment proves both data availability AND validity. same structure, both properties.

the complete stack

zoda (implementation): 2D reed-solomon where each shard proves valid encoding. one shard check = instant DA verification. jam’s 1D encoding can reconstruct to garbage if producer cheated. data overhead roughly equivalent (~3x expansion for both), but zoda gives immediate verification instead of optimistic trust.
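a minimal python sketch of the 2D structure, assuming a toy prime field and a naive lagrange encoder. it shows why a 2D layout supports local checks: every row and every column of the extended grid must itself be a reed-solomon codeword, so a tampered cell breaks both its row and its column. this is plain tensor reed-solomon, not zoda’s randomized verification protocol or its api; all names are hypothetical.

```python
# systematic reed-solomon: k data symbols are read as evaluations at x = 0..k-1
# of a degree < k polynomial, which is then evaluated at x = 0..n-1.
P = 2**31 - 1  # a mersenne prime, used here only as a convenient field modulus


def lagrange_eval(xs, ys, x):
    """evaluate the unique degree < len(xs) polynomial through (xs, ys) at x, mod P."""
    total = 0
    for i, (xi, yi) in enumerate(zip(xs, ys)):
        num, den = 1, 1
        for j, xj in enumerate(xs):
            if j != i:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        total = (total + yi * num * pow(den, P - 2, P)) % P
    return total


def rs_extend(values, n):
    """extend k values to an n-symbol codeword (the first k symbols are unchanged)."""
    xs = list(range(len(values)))
    return [lagrange_eval(xs, values, x) for x in range(n)]


def is_codeword(word, k):
    """a word is a codeword iff interpolating its first k symbols predicts the rest."""
    return rs_extend(word[:k], len(word)) == list(word)


def encode_2d(data, n):
    """extend each data row to length n, then each resulting column to length n."""
    rows = [rs_extend(row, n) for row in data]
    cols = [rs_extend([row[c] for row in rows], n) for c in range(n)]
    return [[cols[c][r] for c in range(n)] for r in range(n)]  # back to row-major


data = [[1, 2, 3], [4, 5, 6], [7, 8, 10]]  # k = 3
grid = encode_2d(data, 6)                   # n = 6: 2x expansion per dimension
rows_ok = all(is_codeword(row, 3) for row in grid)
cols_ok = all(is_codeword([grid[r][c] for r in range(6)], 3) for c in range(6))
print(rows_ok, cols_ok)  # True True

grid[4][5] = (grid[4][5] + 1) % P  # a dishonest producer alters one extended cell
print(is_codeword(grid[4], 3), is_codeword([grid[r][5] for r in range(6)], 3))  # False False
```

the extended rows stay codewords because they are linear combinations of codeword rows; that linearity is what lets a sampled row or column be checked against the structure instead of trusting the producer.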

osst: threshold signature aggregation. 683+ contributions → one 96-byte signature proving 2/3 finality. grandpa stores same data internally but has no compact external representation.

ligerito: execution proof. producer proves once, all validators verify in 65ms.

combined: light client samples one shard (~300kb), verifies DA instantly, verifies one threshold signature, done. milliseconds, not the 18 seconds smoldot users currently endure.

single-slot finality at 1000 validators. trustless light clients. shielded apps become possible.

we’ve implemented osst for ristretto255, pallas, secp256k1, and decaf377: zeratul/osst. reshare module derives new shares from consensus data each epoch - no trusted coordinator.
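the post doesn’t detail how the reshare module derives shares from consensus data, so here is the textbook dealer-free resharing pattern instead (shamir sharing plus lagrange recombination), as a python sketch over a toy prime field. each old holder sub-shares its own share, new holders combine the sub-shares with lagrange coefficients, and nobody ever reconstructs the secret. all names are hypothetical; this is not zeratul’s implementation.

```python
import secrets

P = 2**61 - 1  # toy scalar field (a mersenne prime) for the sketch


def share(secret, t, holders):
    """shamir-share `secret` with threshold t: random degree t-1 polynomial, f(0) = secret.
    holder ids must be distinct and non-zero (x = 0 is reserved for the secret)."""
    coeffs = [secret] + [secrets.randbelow(P) for _ in range(t - 1)]
    return {h: sum(c * pow(h, e, P) for e, c in enumerate(coeffs)) % P for h in holders}


def lagrange_at_zero(i, ids):
    """lagrange coefficient for point i when interpolating at x = 0 over `ids`."""
    num, den = 1, 1
    for j in ids:
        if j != i:
            num = num * (-j) % P
            den = den * (i - j) % P
    return num * pow(den, P - 2, P) % P


def reconstruct(shares):
    ids = list(shares)
    return sum(shares[i] * lagrange_at_zero(i, ids) for i in ids) % P


def reshare(old_shares, t_new, new_holders):
    """old_shares: a quorum (>= old threshold) of {id: share}. each old holder deals a
    sharing of its own share; new holder j sums lambda_i * subshare_i(j)."""
    ids = list(old_shares)
    sub = {i: share(old_shares[i], t_new, new_holders) for i in ids}
    return {j: sum(lagrange_at_zero(i, ids) * sub[i][j] for i in ids) % P for j in new_holders}


s = 123456789
old = share(s, t=3, holders=[1, 2, 3, 4, 5])
quorum = {i: old[i] for i in (1, 3, 5)}  # any 3 old holders suffice
new = reshare(quorum, t_new=4, new_holders=[10, 11, 12, 13, 14, 15])
print(reconstruct({j: new[j] for j in (10, 12, 13, 15)}) == s)  # True
```

the new shares lie on a fresh polynomial with the same constant term, so the group key survives the epoch change while old shares become useless on their own.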

how ligerito works

the core insight: instead of re-running computation, create a short proof it ran correctly. verifier checks the proof in milliseconds instead of re-executing for seconds. million operations compress to 149kb. not hiding information - compressing verification.

how it actually works: imagine you ran a program for 40,000 steps. at each step, some things must be true: the program counter increments correctly, the opcode matches the binary, the alu computes 2+2=4 not 5. each of these is a constraint. correct execution means every constraint holds.
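a toy version of that per-step check in python, assuming a made-up four-instruction program and a simplified trace row of (pc, opcode, a, b, out). pcvm’s actual trace layout isn’t described here, so treat every name below as hypothetical; the point is only that “correct execution” reduces to “every constraint holds at every step”.

```python
# hypothetical toy trace format: one row per step, (pc, opcode, a, b, out).
# real traces record far more (registers, memory, flags).
PROGRAM = ["add", "mul", "add", "sub"]  # the "binary": opcode at each pc

ALU = {
    "add": lambda a, b: a + b,
    "sub": lambda a, b: a - b,
    "mul": lambda a, b: a * b,
}


def violations(trace):
    """return (step, constraint name) for every constraint that fails."""
    bad = []
    for i, (pc, op, a, b, out) in enumerate(trace):
        if not 0 <= pc < len(PROGRAM) or op != PROGRAM[pc]:
            bad.append((i, "opcode matches binary"))
        if op not in ALU or out != ALU[op](a, b):
            bad.append((i, "alu result"))
        if i + 1 < len(trace) and trace[i + 1][0] != pc + 1:
            bad.append((i, "pc increments"))
    return bad


honest = [(0, "add", 2, 2, 4), (1, "mul", 4, 3, 12), (2, "add", 12, 1, 13), (3, "sub", 13, 5, 8)]
cheat = list(honest)
cheat[0] = (0, "add", 2, 2, 5)  # claims 2 + 2 = 5

print(violations(honest))  # []
print(violations(cheat))   # [(0, 'alu result')]
```

a prover system never runs this loop on the verifier’s side; it turns each check into an algebraic residual that is zero exactly when the constraint holds.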

now here’s the trick. we encode all those constraints as a polynomial - a mathematical curve. if execution was correct, this polynomial equals zero everywhere it matters. if you cheated anywhere, the polynomial is non-zero somewhere.

verifier can’t check a million points. but they don’t need to. they pick ONE random point and ask “what’s the value here?” this is schwartz-zippel: a non-zero polynomial almost never happens to be zero at a random point. soundness error is at most d/|F|, where d is the polynomial degree and |F| the field size - for a 128-bit field, that’s negligible. one random check, cryptographic confidence.
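a python sketch of “encode constraints as a polynomial, check one random point”, assuming per-step constraint residuals are already computed (0 = satisfied) and using naive lagrange interpolation over a 61-bit prime field. ligerito’s actual field, encoding and commitment differ; only the schwartz-zippel reasoning carries over. here the value at the random point is computed from the full residual list, which a real verifier would instead receive as an opening of a committed polynomial.

```python
import random

P = 2**61 - 1  # illustrative prime field, not ligerito's actual field


def interpolate_eval(xs, ys, x):
    """evaluate at x the unique polynomial of degree < len(xs) through (xs, ys), mod P."""
    total = 0
    for i, (xi, yi) in enumerate(zip(xs, ys)):
        num, den = 1, 1
        for j, xj in enumerate(xs):
            if j != i:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        total = (total + yi * num * pow(den, P - 2, P)) % P
    return total


def random_check(residuals, rng):
    """interpolate the residuals over the domain 0..n-1 and test one random point.
    all-zero residuals give the zero polynomial, so the check always passes;
    any non-zero residual gives a non-zero polynomial that rarely vanishes at r."""
    xs = list(range(len(residuals)))
    r = rng.randrange(P)
    return interpolate_eval(xs, [v % P for v in residuals], r) == 0


def soundness_error(n_steps):
    """schwartz-zippel bound d/|F| for a polynomial of degree d = n_steps - 1."""
    return (n_steps - 1) / P


rng = random.Random(2026)  # fixed seed for a reproducible demo; use real randomness in practice
print(random_check([0] * 8, rng))                    # True: zero polynomial
print(random_check([0, 0, 0, 1, 0, 0, 0, 0], rng))   # False with overwhelming probability
print(f"{soundness_error(2**20):.1e}")               # bound for a 2^20-step trace
```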

the sumcheck protocol makes this efficient. prover and verifier play a game of random challenges. each round halves the problem. after log(n) rounds, checking a million constraints reduces to checking one point.
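a minimal sumcheck over a multilinear table in python, with prover and verifier simulated in one function. assumptions: a 61-bit prime field, a hypothetical `cheat` flag that makes the prover forge round messages, and `mle_eval` standing in for the polynomial-commitment opening a real verifier would receive. real constraint polynomials have higher degree per variable, so their round polynomials carry more than two values, but the halving structure is the same.

```python
import random

P = 2**61 - 1  # illustrative prime field


def fold(table, r):
    """fix the first variable of a multilinear table to r, halving its size."""
    half = len(table) // 2
    lo, hi = table[:half], table[half:]
    return [((1 - r) * a + r * b) % P for a, b in zip(lo, hi)]


def mle_eval(table, point):
    """multilinear extension of `table` evaluated at `point` (one coordinate per round).
    stands in for a single polynomial-commitment opening."""
    for r in point:
        table = fold(table, r)
    return table[0]


def sumcheck(table, claimed_sum, rng, cheat=False):
    """return True if the verifier accepts sum(table) == claimed_sum (mod P)."""
    claim, prover_table, challenges = claimed_sum % P, list(table), []
    while len(prover_table) > 1:
        half = len(prover_table) // 2
        # prover: the round polynomial g(X) is linear, so g(0) and g(1) define it
        g0 = sum(prover_table[:half]) % P
        g1 = sum(prover_table[half:]) % P
        if cheat:
            g1 = (claim - g0) % P  # lying prover forces the round check to pass
        # verifier: the round polynomial must be consistent with the running claim
        if (g0 + g1) % P != claim:
            return False
        r = rng.randrange(P)
        challenges.append(r)
        claim = ((1 - r) * g0 + r * g1) % P  # g(r) becomes the next claim
        prover_table = fold(prover_table, r)
    # after log2(len(table)) rounds: one evaluation at the random point
    return mle_eval(table, challenges) == claim


rng = random.Random(7)
table = [rng.randrange(P) for _ in range(2**10)]  # 1024 values, 10 rounds
total = sum(table) % P
print(sumcheck(table, total, rng))                  # True
print(sumcheck(table, total + 1, rng))              # False: first round check fails
print(sumcheck(table, total + 1, rng, cheat=True))  # False except with probability <= 10/P
```

a forged round only survives if the verifier’s random challenge hits a root of the difference polynomial, which is why one final evaluation is enough.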

pcvm (polynomial commitment vm) records the execution trace. ligerito commits it as a polynomial - prover can’t change values after committing. memory gets authenticated via merkle trees.
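a minimal merkle commitment to a memory image in python: commit to every word with one root, then open a single address with a log-sized path. sha256 and the leaf/node prefixes are choices made for this sketch, not pcvm’s actual hashing scheme, and the function names are hypothetical.

```python
import hashlib


def h(*parts: bytes) -> bytes:
    return hashlib.sha256(b"".join(parts)).digest()


def build_tree(leaves):
    """all levels of a binary merkle tree, leaf hashes first, root last."""
    if not leaves or (len(leaves) & (len(leaves) - 1)) != 0:
        raise ValueError("this sketch needs a power-of-two number of leaves")
    levels = [[h(b"\x00", leaf) for leaf in leaves]]  # 0x00 prefix marks a leaf
    while len(levels[-1]) > 1:
        prev = levels[-1]
        # 0x01 prefix marks an interior node, so a leaf can't be passed off as a node
        levels.append([h(b"\x01", prev[i], prev[i + 1]) for i in range(0, len(prev), 2)])
    return levels


def open_path(levels, index):
    """sibling hashes from the leaf at `index` up to (not including) the root."""
    path = []
    for level in levels[:-1]:
        path.append(level[index ^ 1])
        index //= 2
    return path


def verify_path(root, leaf, index, path):
    """recompute the root from one leaf and its siblings."""
    node = h(b"\x00", leaf)
    for sibling in path:
        node = h(b"\x01", node, sibling) if index % 2 == 0 else h(b"\x01", sibling, node)
        index //= 2
    return node == root


memory = [v.to_bytes(8, "little") for v in (10, 20, 30, 40, 50, 60, 70, 80)]
levels = build_tree(memory)
root = levels[-1][0]
proof = open_path(levels, 5)
print(verify_path(root, memory[5], 5, proof))                   # True
print(verify_path(root, (61).to_bytes(8, "little"), 5, proof))  # False
```

the prover commits to the root once; every memory read in the trace can then be checked against it with log2(size) hashes.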

this is a succinct argument of knowledge, not a privacy tool. no zk, no witness hiding - just proving execution happened correctly. for deterministic programs with public inputs, the witness is recomputable anyway - re-execution with jit is faster than proving. the value is block validity: producer proves once, validators verify cheaply. million elements proved in 57ms, verified in 2ms.

doom benchmarks (polkavm interpreter, 11.8M instructions/frame, 32 fps):

| size | elements | prove | verify | prove throughput |
|---|---|---|---|---|
| 2^20 | 1M | 57ms | 2ms | 18.4M elements/s |
| 2^24 | 16M | 893ms | 65ms | 18.8M elements/s |
| 2^28 | 256M | 17s | 1.8s | 15.8M elements/s |

100 frames burns 1.18B gas. 3 seconds of gameplay at 32fps = 96 frames = 1.13B instructions, which needs a 2^31-element polynomial (2^30 ≈ 1.07B is just too small).

at 2^24 we prove 1.4 doom frames in 893ms. without SIMD it was 5x slower. easy win from rustc’s -C target-cpu=native.

polkavm jit: 1600-1800 fps (standalone). proven execution: ~1.5 fps. 1000x gap.
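the arithmetic behind these figures, as a quick python check. the only assumption beyond the numbers above is one trace element per executed instruction, which is what the 1.4-frames-at-2^24 figure implies.

```python
INSTR_PER_FRAME = 11_800_000  # doom on the polkavm interpreter, from the benchmark above
PROVE_2_24_S = 0.893          # measured prove time at 2^24 elements
JIT_FPS = (1600, 1800)        # standalone polkavm jit range


def trace_log2(instructions: int) -> int:
    """smallest k with 2**k >= instructions, using exact integer arithmetic."""
    return max(instructions - 1, 0).bit_length()


frames = 3 * 32                           # 3 seconds at 32 fps
instr = frames * INSTR_PER_FRAME          # assumes one trace element per instruction
frames_per_proof = 2**24 / INSTR_PER_FRAME
proven_fps = frames_per_proof / PROVE_2_24_S

print(f"{frames} frames = {instr / 1e9:.2f}B instructions -> 2^{trace_log2(instr)} trace")
print(f"2^24 elements hold {frames_per_proof:.2f} frames -> {proven_fps:.2f} proven fps")
print(f"jit vs proven: {JIT_FPS[0] / proven_fps:.0f}x to {JIT_FPS[1] / proven_fps:.0f}x slower")
```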

worth it? depends on threat model:

- block validity: accidental computer approach. DA commitment encodes validity. 65ms verify at 2^24. block producer proves once, validators verify. almost free.

- private computation: user proves client-side. can’t expose inputs to block producer. 1000x overhead but happens user-side. still enough compute for useful applications. works best as a jam service, but requires deterministic finality to be practical.

binius64 takes a different approach: opcode-specific circuits instead of generic traces. band costs one constraint, imul one constraint, rotr32 uses a specialized rotation. 64x reduction vs bit-level. circuits compose primitives - sha256 uses band, bxor, iadd_32, rotr_32. not generic trace recording.

we used traces for polkavm compatibility. prove existing riscv64em binaries without recompilation. opcode circuits require rewriting as binius64 circuits. tradeoff: generic but slower vs optimized but needs source access.

where this leaves us

jam improves polkadot for general compute. removes substrate complexity. synchronous composition. it’s a real vm. real world computer. for throughput applications it delivers. defi. gaming. data availability. jam works.

zoda should be in jam proper - 2D DA is strictly better than 1D for negligible overhead. that’s not experimental, it’s an upgrade.

for kusama i’d love to see a divergent consensus path on top of that. polkadot optimizes for validator count and throughput. kusama could optimize for finality latency and shielded apps. execution proofs via ligerito remove the re-execution bottleneck. osst removes the signing bottleneck. combine them and single-slot finality becomes possible - exactly what shielded protocols need. different networks, different tradeoffs. kusama as the privacy-first experimental chain would be worth the divergence.

we recently patched penumbra to compute client-side groth16 proofs using parallel wasm and simd128. turned out the bottleneck isn’t computation but loading the circuit binary into memory. once cached, proving parallelizes across cores. the holy grail is agentic apps where users hold their own state and the chain just verifies transitions. jam could be the execution layer. but it needs finality guarantees first, and sustainable state economics - ephemeral notes with tiered persistence could make shielded pools viable without unbounded commitment tree growth.

true web3 is agentic computation. users hold their own state. chain verifies transitions. current status quo: complete state exists publicly, eventually used against you as permanent record. every transaction visible forever. every balance queryable. every interaction logged. that’s not sovereignty. i find it increasingly hard to contribute to a permanent public ledger.

sources:

- jam graypaper

- elves paper

- osst paper

- osst crate

- reshare

- zoda paper

- zoda implementation

- celestia fibre

- commonware monorepo

- accidental computer

- ligerito

- binius64

- pcvm

- romio

- jam-netadapter

- smoldot

- jamt tracing

- gfw quic blocking